TL;DR: MDST brings GGUF, one of the most popular formats for LLMs, to WebGPU, so anyone can create, edit, and review files and collaborate from their browser without depending on cloud LLM providers or complicated setups.

In 2026, more people want local models that they can actually run and trust, and the hardware and software are finally catching up. Better consumer GPUs, new models, and better quantizations are making “local” feel more normal and accessible than ever.

So we built a WASM/JS engine that runs GGUF models on WebGPU. GGUF is one of the most popular LLM formats and supports a range of quantizations. Because a model ships as a single file, it suits consumer-grade devices and is easy to download, cache, and tune.
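To make the "single-file container" point concrete, here is a minimal sketch of reading the fixed-size header at the start of a GGUF file. It is not MDST's engine code. It assumes the header layout of GGUF version 2 or later (little-endian magic, version, tensor count, metadata count), and the names `readGgufHeader`, `readUint64AsNumber`, and `makeSampleGgufHeader` are hypothetical helpers made up for this illustration.

```typescript
// Sketch only: parse the first 24 bytes of a GGUF file (GGUF v2+ layout assumed).
//   bytes 0..3    magic "GGUF"
//   bytes 4..7    version (uint32, little-endian)
//   bytes 8..15   tensor count (uint64, little-endian)
//   bytes 16..23  metadata key/value count (uint64, little-endian)

interface GgufHeader {
  version: number;
  tensorCount: number;
  metadataKvCount: number;
}

// Reads a little-endian uint64 as a JS number.
// Exact only below 2^53, which is plenty for tensor and metadata counts.
function readUint64AsNumber(view: DataView, offset: number): number {
  const low = view.getUint32(offset, true);
  const high = view.getUint32(offset + 4, true);
  return high * 4294967296 + low;
}

function readGgufHeader(buffer: ArrayBuffer): GgufHeader {
  if (buffer.byteLength < 24) {
    throw new Error("Buffer is too small to hold a GGUF header");
  }
  const view = new DataView(buffer);
  const magic = String.fromCharCode(
    view.getUint8(0),
    view.getUint8(1),
    view.getUint8(2),
    view.getUint8(3)
  );
  if (magic !== "GGUF") {
    throw new Error("Not a GGUF file: unexpected magic bytes");
  }
  const version = view.getUint32(4, true);
  if (version < 2) {
    // Version 1 used 32-bit counts, which this sketch does not handle.
    throw new Error("Unsupported GGUF version: " + version);
  }
  return {
    version,
    tensorCount: readUint64AsNumber(view, 8),
    metadataKvCount: readUint64AsNumber(view, 16),
  };
}

// Hypothetical helper: builds a synthetic header so the sketch runs without a real model file.
function makeSampleGgufHeader(): ArrayBuffer {
  const buffer = new ArrayBuffer(24);
  const view = new DataView(buffer);
  [0x47, 0x47, 0x55, 0x46].forEach((byte, i) => view.setUint8(i, byte)); // "GGUF"
  view.setUint32(4, 3, true);    // version
  view.setUint32(8, 291, true);  // tensor count (low 32 bits)
  view.setUint32(16, 24, true);  // metadata count (low 32 bits)
  return buffer;
}

const sampleHeader = readGgufHeader(makeSampleGgufHeader());
console.log(sampleHeader.version, sampleHeader.tensorCount, sampleHeader.metadataKvCount);
```

In a browser, the same function could be fed from a user-selected file with `await file.slice(0, 24).arrayBuffer()`, so only the first few bytes are read before deciding whether to load the whole model.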

We believe this will open a new, bigger market for GGUF: fast, local inference for anyone who just wants it to work, right in the browser.

What MDST is

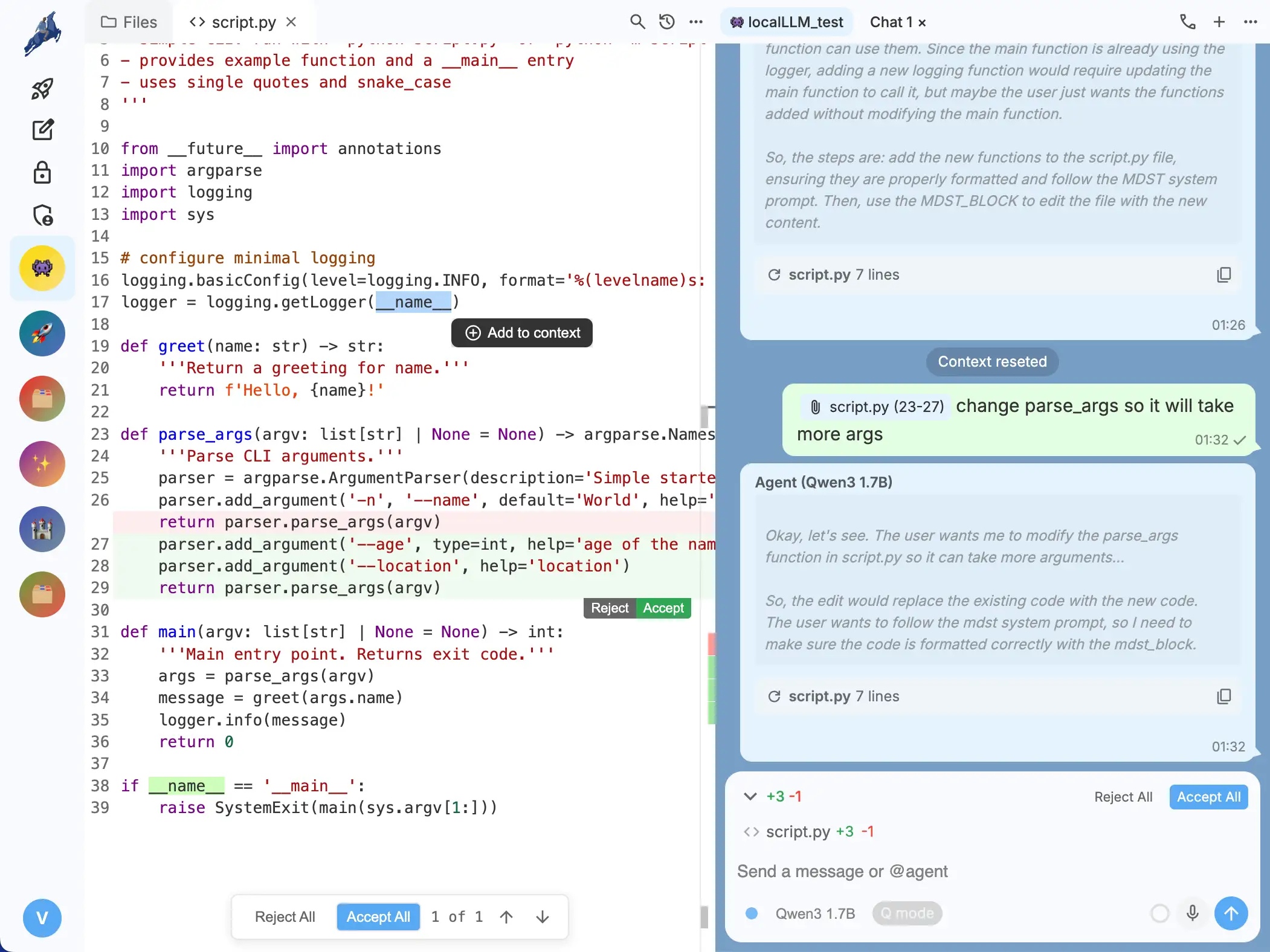

MDST is a free, agentic, secure, collaborative IDE, with cloud and local agentic inference integrated directly into the workspaces.

Instead of copying context between tools and teammates, MDST can sync, merge, and store everything inside one or many projects, with shared access to your files, history, and full context for your team, while keeping everything E2E encrypted and GDPR-compliant.

With MDST, you can:

- Download and run LLMs in your browser with a single click. No complicated setup is needed, and it works on any device that supports WebGPU.

- Sync projects in real time with GitHub or your local filesystem, so you never lose your work or any changes you made.

- Stay stable under load, without getting locked into a single provider's API mood swings or quality downgrades.

- Keep files and conversations private, with end-to-end encryption as a first-class default. Signal-style privacy mode included.

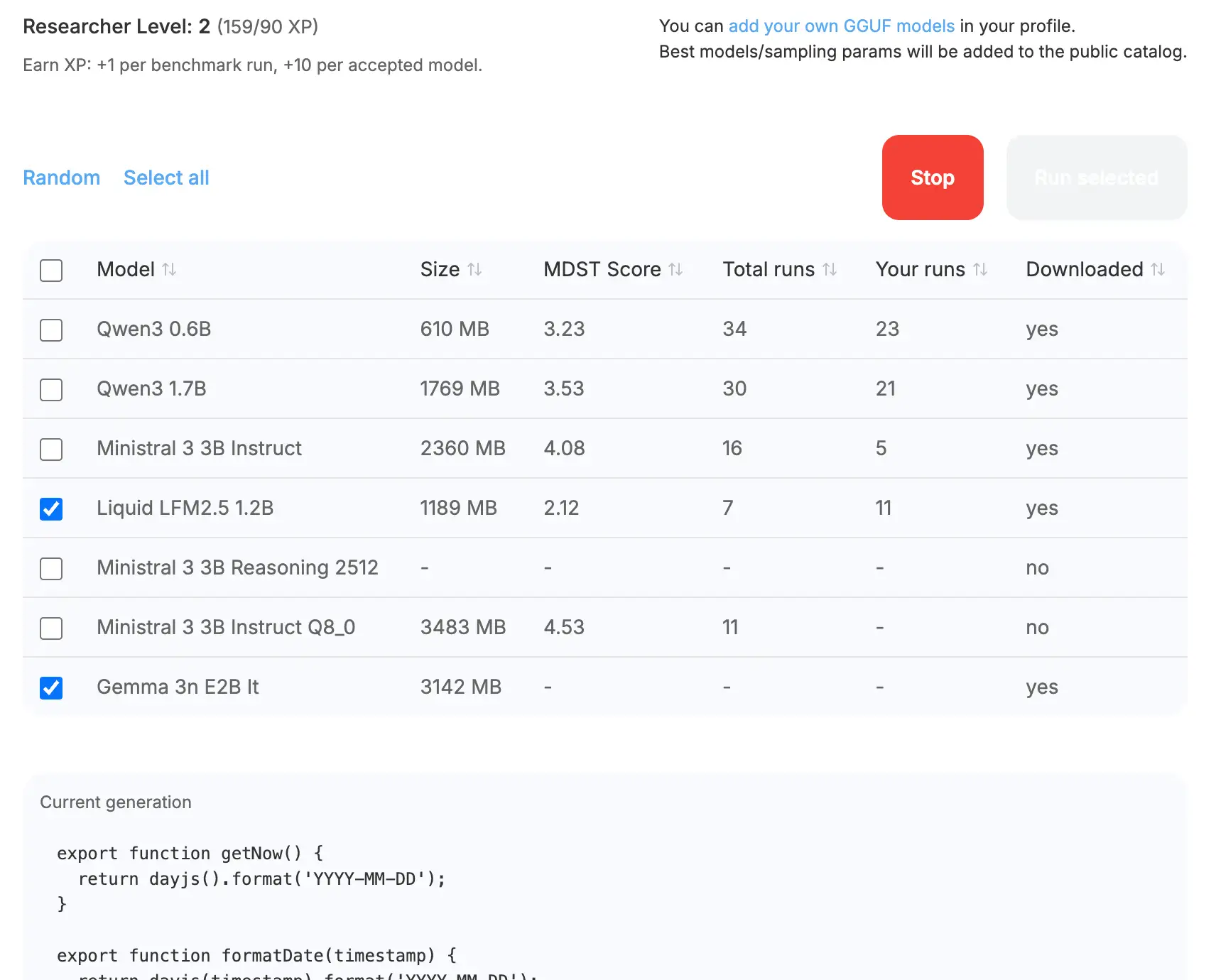

- Benchmark models where they run, with local runs feeding a public WebGPU leaderboard.

Research and learning for everyone

From now on, all you need for local inference, LLM learning, and research is a modern browser (Chrome, Safari, and Edge are supported; Firefox is coming soon), a laptop that is five years old or newer (an M1 MacBook Air works well with small models), and a GGUF model.
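If you want to check whether a given browser meets the WebGPU requirement, a feature check along these lines is one way to do it. This is a minimal sketch, not MDST's own detection logic. It relies on the standard `navigator.gpu.requestAdapter()` call, while the `NavigatorLike` and `GpuLike` types and the `checkWebGpu` function are hypothetical names introduced so the example compiles without WebGPU typings.

```typescript
// Minimal structural types (assumptions for this sketch) so no WebGPU typings are required.
interface GpuLike {
  requestAdapter(): Promise<object | null>;
}

interface NavigatorLike {
  gpu?: GpuLike;
}

type WebGpuStatus = "ready" | "no-api" | "no-adapter";

// Reports whether WebGPU is usable:
//   "no-api"     -> the browser does not expose navigator.gpu at all
//   "no-adapter" -> the API exists, but no suitable GPU adapter was returned
//   "ready"      -> an adapter is available
async function checkWebGpu(nav: NavigatorLike): Promise<WebGpuStatus> {
  if (!nav.gpu) {
    return "no-api";
  }
  const adapter = await nav.gpu.requestAdapter();
  return adapter ? "ready" : "no-adapter";
}

// In a browser you would call it with the global navigator, for example:
//   checkWebGpu(navigator as NavigatorLike).then((status) => console.log(status));
```

Taking the navigator object as a parameter keeps the check easy to exercise outside a browser. The cast in the usage comment is only needed when your TypeScript setup has no WebGPU type definitions.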

Open the Research module and run the local benchmark suite across tasks and difficulty levels to test and promote your model or sampling parameters on the public WebGPU leaderboard.

Every run happens on your own machine, in your browser, and produces results that remain comparable over time. The leaderboard ranks models by a weighted score across benchmark tasks and difficulty levels, so higher-difficulty tasks matter more than easy wins.
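As an illustration of how a difficulty-weighted score behaves, here is a minimal sketch. The actual leaderboard formula is not given in this post, so the weights in `DIFFICULTY_WEIGHT`, the three difficulty levels, and the `weightedScore` function are all assumptions chosen only to show the idea.

```typescript
type Difficulty = "easy" | "medium" | "hard";

interface TaskResult {
  task: string;
  difficulty: Difficulty;
  accuracy: number; // fraction of correct answers, between 0 and 1
}

// Hypothetical weights: harder tasks count for more. The real leaderboard may use different values.
const DIFFICULTY_WEIGHT: Record<Difficulty, number> = {
  easy: 1,
  medium: 2,
  hard: 4,
};

// Weighted average of per-task accuracy, normalized to the range 0..1.
function weightedScore(results: TaskResult[]): number {
  let weightedSum = 0;
  let totalWeight = 0;
  for (const result of results) {
    const weight = DIFFICULTY_WEIGHT[result.difficulty];
    weightedSum += weight * result.accuracy;
    totalWeight += weight;
  }
  return totalWeight === 0 ? 0 : weightedSum / totalWeight;
}

// Two made-up models with mirrored strengths.
const strongOnEasy: TaskResult[] = [
  { task: "arithmetic", difficulty: "easy", accuracy: 1.0 },
  { task: "multi-step reasoning", difficulty: "hard", accuracy: 0.5 },
];
const strongOnHard: TaskResult[] = [
  { task: "arithmetic", difficulty: "easy", accuracy: 0.5 },
  { task: "multi-step reasoning", difficulty: "hard", accuracy: 1.0 },
];

console.log(weightedScore(strongOnEasy).toFixed(2)); // (1*1.0 + 4*0.5) / 5 = 0.60
console.log(weightedScore(strongOnHard).toFixed(2)); // (1*0.5 + 4*1.0) / 5 = 0.90
```

Under these assumed weights, the model that does better on the hard task ranks higher even though both models have the same unweighted average.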

Why now and what's next

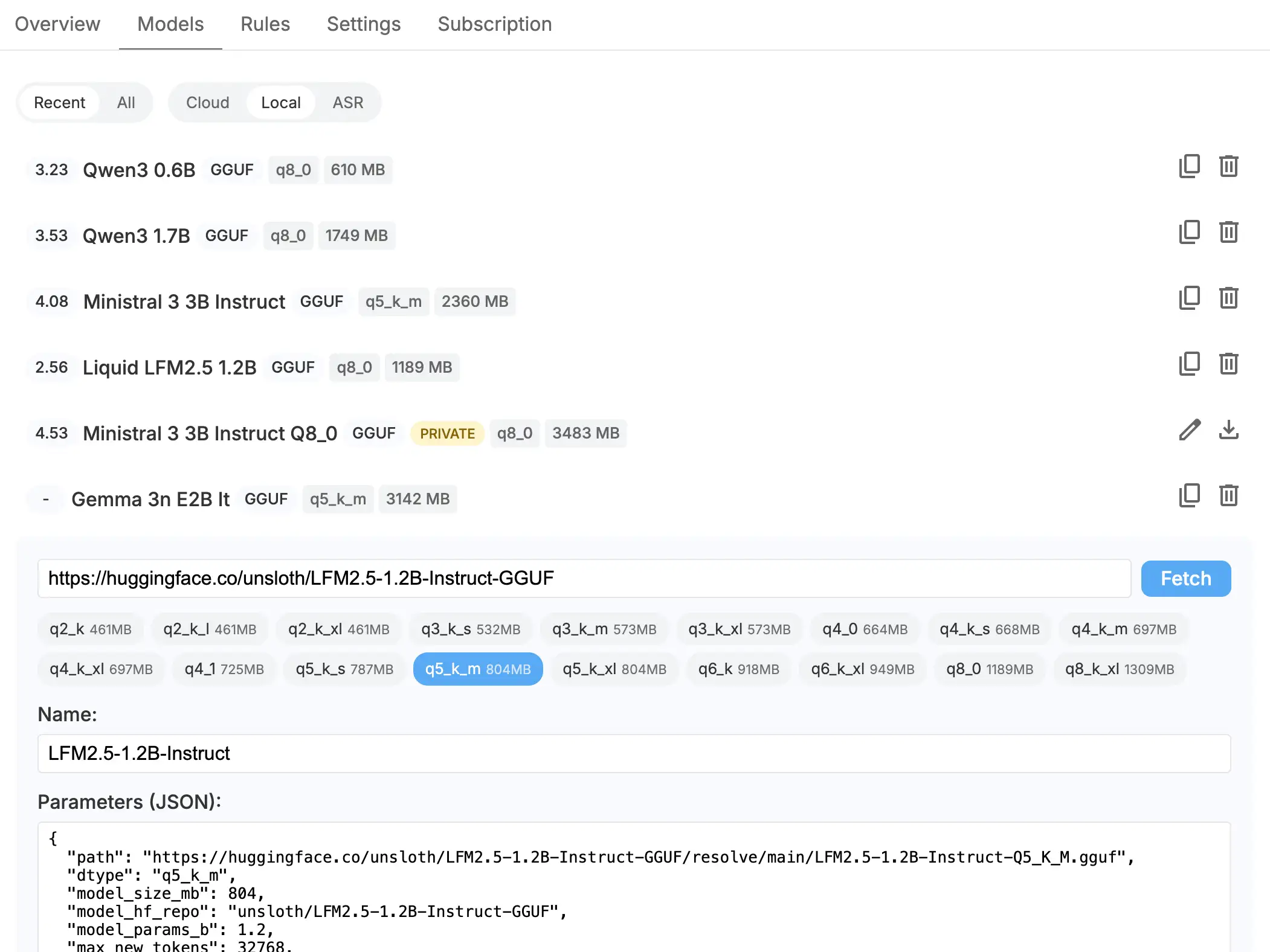

MDST currently supports these cloud and local LLM families in different quantizations, and we're working on adding more:

| Type | Family | Models |
|---|---|---|
| Cloud | Claude | Sonnet 4.5, Opus 4.6 |
| Cloud | OpenAI GPT | 5.2, 5.1 Mini, Codex 5.2 |
| Cloud | Gemini | 3 Pro Preview |
| Cloud | Kimi | K2 |
| Cloud | DeepSeek | V3.2 |
| Local GGUF | Qwen | 3 Thinking |
| Local GGUF | Ministral | 3 Instruct |
| Local GGUF | LFM | 2.5 |
| Local GGUF | Gemma | 3 IT |

This combination gives both users and us the flexibility to choose the best model for the task at hand and to learn from experiments with different prompts and quantizations.

WebGPU is finally fast enough on mainstream hardware, and GGUF has become the simplest way to ship a quantized model as a single artifact. Put together, that makes real local inference in the browser practical and accessible for everyone, even on modest hardware.

Your feedback and support will show us what to optimize next. Please share this post with the community. We'd appreciate your support!