In the newly released 1.0 version of Qodo Gen, our IDE plugin for AI coding and testing, we introduce agentic workflows that let AI make dynamic decisions to navigate complex coding tasks.

To enable agentic capabilities in Qodo Gen we restructured our infrastructure using two key technologies: LangGraph for structured agentic workflows and Anthropic’s Model Context Protocol (MCP) for standardized integration of external tools.

In this post, we’ll walk through how we evolved our infrastructure for agentic flows, how we used LangGraph to orchestrate multi-step flows, and how we used MCP to support extensible tooling.

Evolving infrastructure for agentic AI

As an early adopter of AI, we had built extensive logic to compensate for the limitations of earlier LLMs, which struggled with context retention, reasoning depth, and consistent output. That logic handled tasks like chunking data, manually injecting context, and re-checking responses.

In multi-step, agentic flows, the AI can dynamically decide which tools and data sources to use at each step, reducing the need for rigid, pre-scripted logic. Stronger models like Claude Sonnet further reduced the need for our earlier compensations, as they reason more effectively on their own. This allowed us to simplify our infrastructure while improving flexibility and accuracy.

Building this “smart chat,” however, required more than just hooking up an LLM. We needed a way to structure the logic, handle multiple steps, and let the AI call different tools across back-end and front-end systems.

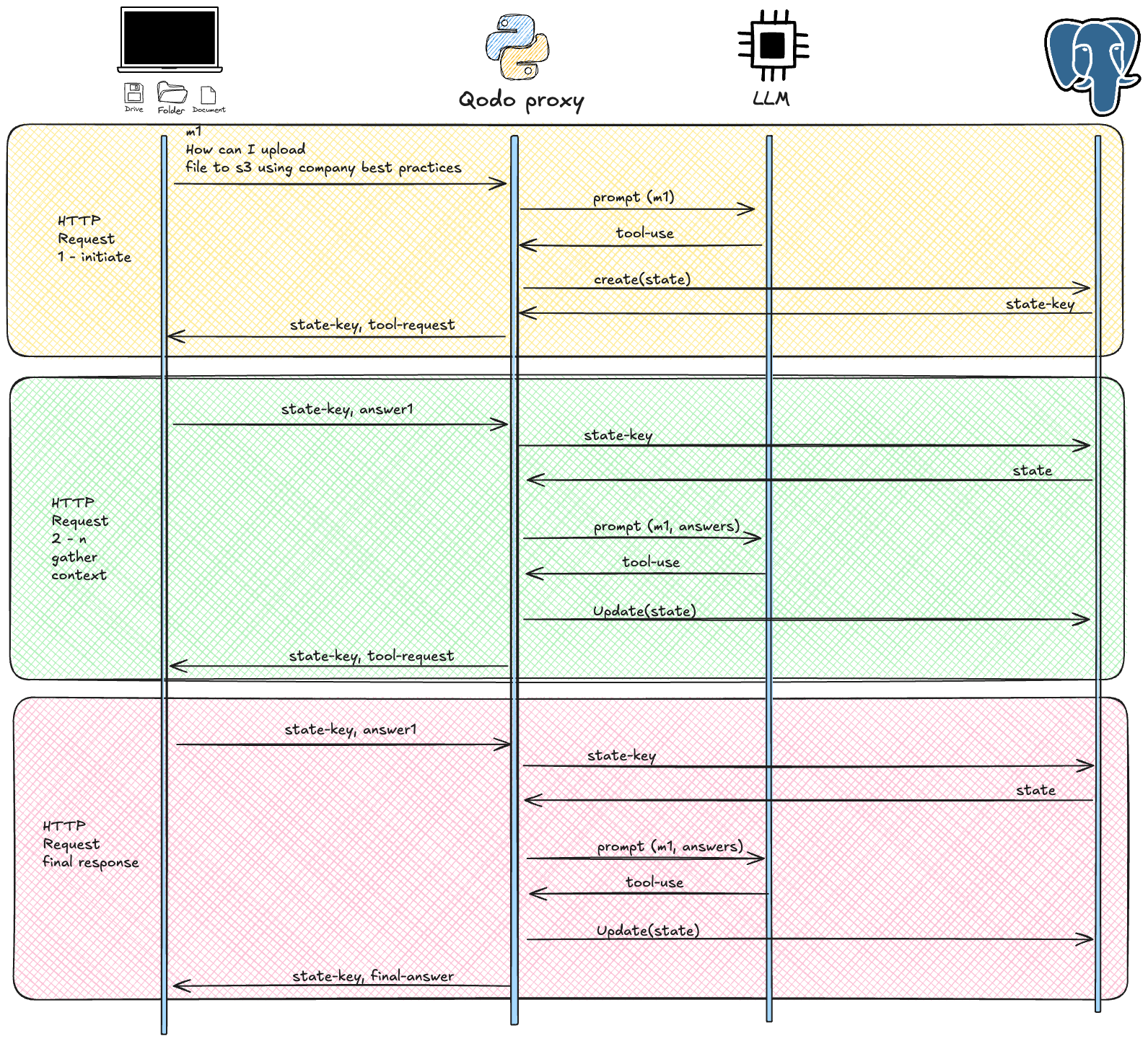

Asynchronous communication

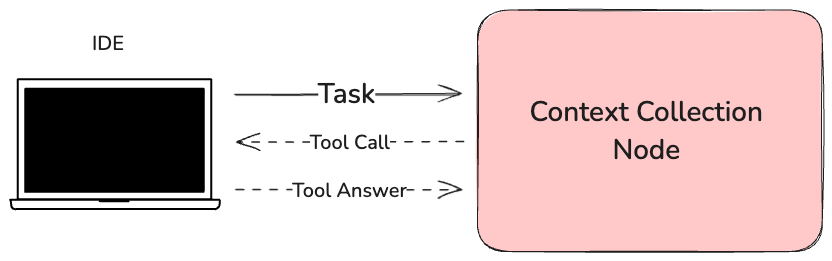

Agentic flows introduce bidirectional, asynchronous communication patterns. The model must be able to make multiple tool calls during a single “thinking” process, with each call potentially triggering complex workflows before returning results.

Our previous architecture, however, assumed a synchronous flow: gather context, send it to the LLM, receive a response. This fundamentally different interaction pattern required rethinking our communication layers.

We implemented bi-directional asynchronous communication between IDEs and the backend, enabling seamless, real-time interactions essential for an agentic workflow. This allows the AI to dynamically request and process context, execute tools, and respond to user actions without blocking operations, improving responsiveness and efficiency.
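
As a rough illustration of this non-blocking request/response pattern, here is a minimal sketch using Python's `asyncio`. The two queues stand in for the real IDE-to-backend channel, and `ide_side`, `backend_side`, and the message fields are hypothetical names, not Qodo Gen's actual protocol.

```python
import asyncio

async def ide_side(requests: asyncio.Queue, responses: asyncio.Queue) -> None:
    """Hypothetical IDE endpoint: serves tool requests until it receives None."""
    while (msg := await requests.get()) is not None:
        # Pretend to run an IDE-side tool, e.g. reading an open file.
        result = f"contents of {msg['path']}"
        await responses.put({"id": msg["id"], "result": result})

async def backend_side(requests: asyncio.Queue, responses: asyncio.Queue) -> list[str]:
    """Hypothetical backend: issues several tool calls within one model turn."""
    results = []
    for call_id, path in enumerate(["app.py", "test_app.py"]):
        await requests.put({"id": call_id, "path": path})
        reply = await responses.get()  # awaiting yields control instead of blocking
        results.append(reply["result"])
    await requests.put(None)  # tell the IDE side to shut down
    return results

async def main() -> list[str]:
    requests, responses = asyncio.Queue(), asyncio.Queue()
    ide_task = asyncio.create_task(ide_side(requests, responses))
    results = await backend_side(requests, responses)
    await ide_task
    return results

results = asyncio.run(main())
```

Both sides run on the same event loop here; in a real deployment the queues would be replaced by a network channel, but the shape of the exchange stays the same.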

Context handling

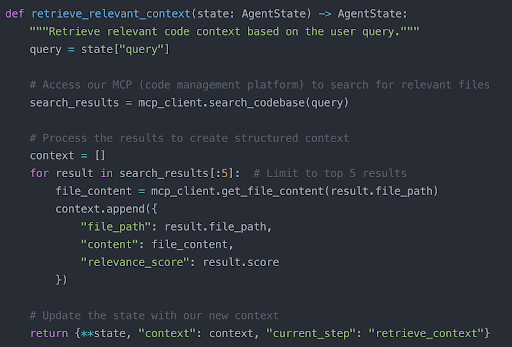

In initial versions of Qodo Gen, we were proactively gathering all possible context and filtering it before passing to the LLM. This approach was incompatible with agentic flows, which are designed to request specific information when needed, not receive everything all at once.

To support this on-demand context retrieval, we shifted to a more task-oriented architecture to let the LLM dynamically request exactly what it needs through tools based on the specific task and session state.
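
The difference from up-front gathering can be sketched as follows. This is an assumption-laden toy: `CONTEXT_TOOLS`, `fetch_context`, and the tool names are invented for illustration, and the tools return placeholder strings rather than real repository data.

```python
from typing import Callable

# Hypothetical context tools; a real system would query the IDE or the repo.
CONTEXT_TOOLS: dict[str, Callable[[str], str]] = {
    "read_file": lambda arg: f"<contents of {arg}>",
    "find_usages": lambda arg: f"<call sites of {arg}>",
}

def fetch_context(requests: list[tuple[str, str]]) -> dict[str, str]:
    """Resolve only the (tool, argument) pairs the model asked for."""
    context = {}
    for tool_name, arg in requests:
        tool = CONTEXT_TOOLS.get(tool_name)
        key = f"{tool_name}:{arg}"
        context[key] = tool(arg) if tool else "error: unknown tool"
    return context

# The model (stubbed here) asks for one file instead of receiving the whole repo.
context = fetch_context([("read_file", "billing.py")])
```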

Error handling and reliability

Complex, asynchronous workflows have multiple potential failure points. When an LLM makes a tool call, that request can travel through several layers—from the orchestration layer, to the tool provider, and back again. Our previous error handling mechanisms were designed for synchronous interactions with fewer potential failure modes. We enhanced error handling systems to manage:

- Asynchronous communication failures – Implemented retries and fallback mechanisms to maintain stability.

- Tool execution errors – Introduced structured error reporting and failover strategies.

- Session state inconsistencies – Developed validation and recovery logic to maintain accurate user context.

- Database operations – Improved transactional integrity and redundancy measures to prevent data corruption or loss.
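
To make the first two items concrete, here is one possible shape for retries with structured error reporting. It is a sketch under assumptions: `ToolResult`, `call_with_retries`, and the choice to treat `ConnectionError` as the only transient failure are illustrative, not a description of the production system.

```python
import time
from dataclasses import dataclass
from typing import Callable, Optional

@dataclass
class ToolResult:
    """Structured outcome handed back to the orchestration layer."""
    ok: bool
    value: Optional[str] = None
    error: Optional[str] = None
    attempts: int = 0

def call_with_retries(tool: Callable[[], str], max_attempts: int = 3,
                      base_delay: float = 0.01) -> ToolResult:
    last_error = ""
    for attempt in range(1, max_attempts + 1):
        try:
            return ToolResult(ok=True, value=tool(), attempts=attempt)
        except ConnectionError as exc:  # treated as transient: back off and retry
            last_error = str(exc)
            time.sleep(base_delay * 2 ** (attempt - 1))
        except Exception as exc:  # treated as permanent: report immediately
            return ToolResult(ok=False, error=f"{type(exc).__name__}: {exc}",
                              attempts=attempt)
    return ToolResult(ok=False, error=f"gave up: {last_error}", attempts=max_attempts)

# A flaky stub tool that fails twice before succeeding.
calls = {"n": 0}

def flaky_tool() -> str:
    calls["n"] += 1
    if calls["n"] < 3:
        raise ConnectionError("socket closed")
    return "lint passed"

result = call_with_retries(flaky_tool)
```

Returning a value object instead of raising lets the caller pass the failure back to the model as an observation, which can then decide whether to try a different tool.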

Orchestration and task management

A key change was adopting an orchestrator-workers model, where the central LLM can dynamically break down complex tasks and delegate them to various tools as needed. For example, when resolving a CI/CD issue, the LLM can analyze error logs, call a debugging tool, suggest fixes, and verify the solution—all in a coordinated sequence.

We also implemented an agent loop pattern, enabling the LLM to:

- Operate autonomously – Initiate and adjust tasks without manual intervention.

- Retrieve real-time “ground truth” – Continuously gather fresh data from the environment, such as live API responses or version control updates.

- Make informed decisions – Adjust strategies based on tool execution results, like retrying a failed test or modifying a deployment plan.

- Maintain session state – Persist context across iterations, ensuring consistency in multi-step processes like progressive code refactoring.
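
The loop described above can be sketched in a few lines. Everything here is a stand-in: `stub_model` replaces the LLM with hard-coded rules, and `run_tests` and `apply_fix` are hypothetical tools that only mutate an in-memory dict.

```python
from typing import Callable

def run_tests(state: dict) -> str:
    """Stub tool: tests fail until a fix has been applied."""
    return "pass" if state.get("fix_applied") else "fail"

def apply_fix(state: dict) -> str:
    """Stub tool: records that a fix was applied."""
    state["fix_applied"] = True
    return "patched"

TOOLS: dict[str, Callable[[dict], str]] = {"run_tests": run_tests, "apply_fix": apply_fix}

def stub_model(history: list[tuple[str, str]]) -> str:
    """Stand-in for the LLM: picks the next action from the latest observation."""
    if not history:
        return "run_tests"
    last_tool, observation = history[-1]
    if last_tool == "run_tests":
        return "done" if observation == "pass" else "apply_fix"
    return "run_tests"  # after a fix, re-check the ground truth

def agent_loop(max_steps: int = 10) -> list[tuple[str, str]]:
    state: dict = {}  # session state persisted across iterations
    history: list[tuple[str, str]] = []
    for _ in range(max_steps):  # hard cap so the loop always terminates
        action = stub_model(history)
        if action == "done":
            break
        history.append((action, TOOLS[action](state)))
    return history

history = agent_loop()
```

The step cap is a deliberate design choice: an autonomous loop needs a termination condition that does not depend on the model deciding to stop.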

Using LangGraph to enable multi-step agentic flows

To support dynamic tool orchestration, we restructured Qodo Gen’s backend around LangGraph, which simplifies low-level tasks and streamlines agentic workflows.

Flexible graph-based infrastructure

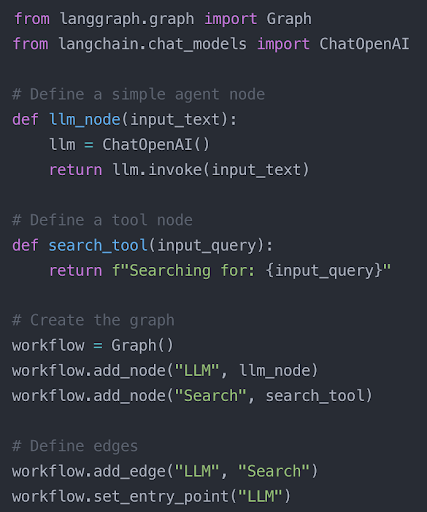

From the start, we knew we wanted a flexible approach that wouldn’t lock us into a rigid architecture. LangGraph’s graph-based structure allowed us to define complex workflows where each node can do something different—from calling the LLM to fetching data from a tool—without forcing us into a single linear chain of steps. We chose to use LangGraph without LangChain to maintain greater control over our execution logic and avoid unnecessary abstractions. This allows us to build exactly what we need without additional complexity or dependencies.

Here’s an example of defining a LangGraph workflow:
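
As a dependency-free approximation of the idea, the sketch below builds a tiny graph runner with nodes, fixed edges, and a conditional edge. It does not use LangGraph's actual API; `MiniGraph` and the node names are hypothetical and exist only to show how nodes and routing fit together.

```python
from typing import Callable, Union

State = dict
Router = Callable[[State], str]
END = "__end__"

class MiniGraph:
    """Toy graph runner for illustration; a stand-in, not LangGraph itself."""

    def __init__(self) -> None:
        self.nodes: dict[str, Callable[[State], State]] = {}
        self.edges: dict[str, Union[str, Router]] = {}

    def add_node(self, name: str, fn: Callable[[State], State]) -> None:
        self.nodes[name] = fn

    def add_edge(self, src: str, dst: Union[str, Router]) -> None:
        # dst is either a fixed node name or a router function that picks one.
        self.edges[src] = dst

    def run(self, start: str, state: State, max_steps: int = 20) -> State:
        current = start
        for _ in range(max_steps):
            if current == END:
                return state
            state = {**state, **self.nodes[current](state)}  # merge node output
            nxt = self.edges[current]
            current = nxt(state) if callable(nxt) else nxt
        raise RuntimeError("step limit reached")

graph = MiniGraph()
graph.add_node("classify", lambda s: {"intent": "explain" if "?" in s["request"] else "edit"})
graph.add_node("explain", lambda s: {"answer": "explanation"})
graph.add_node("edit", lambda s: {"answer": "patch"})
graph.add_edge("classify", lambda s: s["intent"])  # branch on the node's output
graph.add_edge("explain", END)
graph.add_edge("edit", END)

final = graph.run("classify", {"request": "What does this function do?"})
```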

Built-in state management

One challenge in multi-step workflows is tracking intermediate outputs without manually juggling state at every step. LangGraph provides built-in state management under the hood: all you need is a session ID, and the rest is taken care of. This keeps each node’s data accessible to subsequent steps.

Effective agentic workflows also require state persistence across multiple interactions. Our previous design treated each interaction as mostly stateless, with minimal context carried forward.

To ensure reliable state management across extended agent interactions, we introduced persistent database storage for session data. We conducted extensive performance and stress testing to identify the optimal database solution, balancing speed, scalability, and reliability. This allows sessions to retain context across interactions, improving continuity in AI-assisted development.
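
A minimal sketch of session persistence keyed by session ID follows. SQLite is used only because it ships with Python; the database we selected is not named in this post, and `SessionStore` and its schema are hypothetical.

```python
import json
import sqlite3

class SessionStore:
    """Hypothetical store that persists one JSON state blob per session ID."""

    def __init__(self, path: str = ":memory:") -> None:
        self.db = sqlite3.connect(path)
        self.db.execute(
            "CREATE TABLE IF NOT EXISTS sessions (id TEXT PRIMARY KEY, state TEXT NOT NULL)"
        )

    def save(self, session_id: str, state: dict) -> None:
        with self.db:  # commits on success, rolls back on error
            self.db.execute(
                "INSERT OR REPLACE INTO sessions (id, state) VALUES (?, ?)",
                (session_id, json.dumps(state)),
            )

    def load(self, session_id: str) -> dict:
        row = self.db.execute(
            "SELECT state FROM sessions WHERE id = ?", (session_id,)
        ).fetchone()
        return json.loads(row[0]) if row else {}

store = SessionStore()
store.save("session-1", {"files_seen": ["app.py"]})
store.save("session-1", {"files_seen": ["app.py", "db.py"]})  # later interaction
restored = store.load("session-1")
```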

With LangGraph as our framework, we had to define a structured system for AI interaction that relies on nodes, agents, and tools working together to make intelligent decisions.

Agent vs. nodes

An agent is the overall intelligent system that can make decisions about next steps. In our architecture, the entire LangGraph workflow functions as a unified agent, though individual nodes may have varying levels of autonomy.

Nodes are the building blocks of our graph. Each node in the LangGraph workflow is responsible for a specific action. We roughly categorize nodes into three types:

- Static nodes: Perform a straightforward, deterministic function (e.g., parse a file, do some quick formatting).

- LLM nodes: Call an LLM with a prompt to generate or refine text.

- Agentic nodes: Decide on their own which “tool” to call or how to proceed. These nodes have a bit more autonomy, often bridging the gap to external APIs or services.

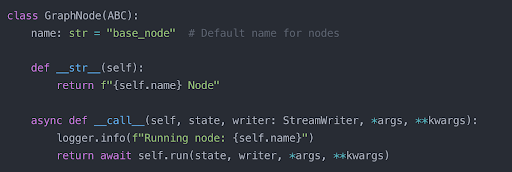

An example of a base node: each node implements the run method according to its own responsibility.

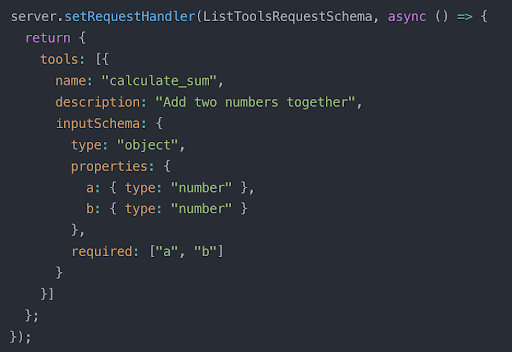
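
In text form, a base node along these lines might look like the sketch below. The class names, the dict-based state, and the `llm` callable are assumptions made for illustration; only the idea of a shared `run` method comes from the description above.

```python
from abc import ABC, abstractmethod
from typing import Callable

class BaseNode(ABC):
    """Every node exposes the same entry point and returns a state update."""
    name: str = "base"

    @abstractmethod
    def run(self, state: dict) -> dict:
        ...

class ParseFileNode(BaseNode):
    """Static node: deterministic, no model involved."""
    name = "parse_file"

    def run(self, state: dict) -> dict:
        return {"lines": state["source"].splitlines()}

class SummarizeNode(BaseNode):
    """LLM node: builds a prompt and delegates to an injected model callable."""
    name = "summarize"

    def __init__(self, llm: Callable[[str], str]) -> None:
        self.llm = llm

    def run(self, state: dict) -> dict:
        prompt = f"Summarize {len(state['lines'])} lines of code"
        return {"summary": self.llm(prompt)}

# Run two nodes in sequence with a stubbed model.
state = {"source": "a = 1\nb = 2"}
for node in (ParseFileNode(), SummarizeNode(llm=lambda p: f"[stub] {p}")):
    state.update(node.run(state))
```

Injecting the model as a plain callable keeps LLM nodes testable without network access.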

The MCP backbone for extensible tooling

Tools are the external capabilities the agent (or node) can use, such as fetching code artifacts, searching the web, or running database queries. A core concept in our implementation was the Model Context Protocol (MCP) from Anthropic. With MCP, Anthropic introduced a framework for managing communication between IDEs, AI models, and external tools, and provided a way to expose external tool functionality to AI models.

MCP enables the creation of a plugin architecture where various coding tools (linters, compilers, source control, etc.) can be registered as capabilities available to the AI. A tool can be registered with appropriate metadata that describes:

- What the tool does

- Required inputs/parameters

- Expected outputs

- Error handling patterns

Tools expose their functionality through consistent JSON patterns that the AI can understand, for example:
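
A tool description in this style might look like the following. The field names `name`, `description`, and `inputSchema` follow the shape MCP uses when listing tools; the `run_linter` tool itself and the `missing_arguments` helper are hypothetical.

```python
import json

# Hypothetical tool definition; inputSchema is ordinary JSON Schema.
lint_tool = {
    "name": "run_linter",
    "description": "Run the project's linter on one file and return its findings.",
    "inputSchema": {
        "type": "object",
        "properties": {
            "path": {"type": "string", "description": "File to lint, relative to the repo root"},
            "fix": {"type": "boolean", "description": "Apply safe auto-fixes"},
        },
        "required": ["path"],
    },
}

def missing_arguments(tool: dict, arguments: dict) -> list[str]:
    """Return required parameters that the caller did not supply."""
    required = tool["inputSchema"].get("required", [])
    return [name for name in required if name not in arguments]

print(json.dumps(lint_tool, indent=2))
```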

MCP structures how tool results are returned to the model in a standardized format:
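
The sketch below builds and reads a result in that shape: a JSON-RPC 2.0 response whose `result` carries a `content` list and an `isError` flag. The helper names `make_tool_result` and `read_tool_result` are invented for this example, and only text content is handled.

```python
import json

def make_tool_result(request_id: int, text: str, is_error: bool = False) -> str:
    """Serialize a tool result as a JSON-RPC response with MCP-style content."""
    return json.dumps({
        "jsonrpc": "2.0",
        "id": request_id,
        "result": {
            "content": [{"type": "text", "text": text}],
            "isError": is_error,
        },
    })

def read_tool_result(raw: str) -> tuple[bool, str]:
    """Return (succeeded, combined text) from a serialized tool result."""
    result = json.loads(raw)["result"]
    text = "\n".join(p["text"] for p in result["content"] if p["type"] == "text")
    return (not result.get("isError", False), text)

ok, text = read_tool_result(make_tool_result(7, "2 warnings in app.py"))
```

Because failures travel in the same envelope with `isError` set, the model sees tool errors as ordinary observations it can react to.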

This standardized JSON format eliminates the need for custom parsers and format converters for each tool integration. The real power of MCP is that it establishes a consistent communication protocol between AI models and tools within your system. Rather than creating bespoke interfaces for each integration, MCP provides a structured framework that makes adding new tools more straightforward and maintainable. This standardization significantly reduces integration overhead and simplifies debugging.

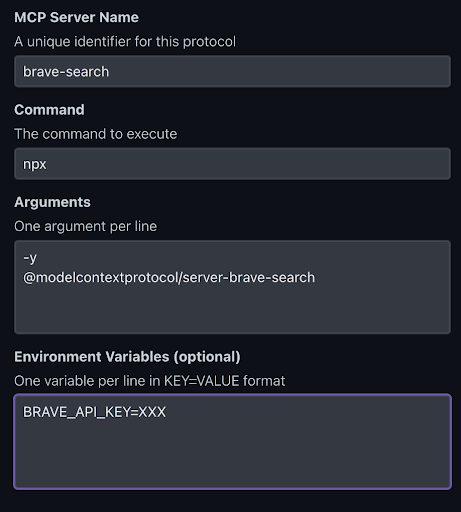

We integrated MCP with our LangGraph workflow, allowing all agent interactions with external tools to happen through standardized MCP communication protocols. This approach ensures that tool interactions follow consistent patterns while giving us the flexibility to add new capabilities (like searching Jira) without rewriting our core agent logic. Additionally, it allows users to bring their own MCP-compatible tools, significantly expanding the agent’s capabilities beyond what we could build ourselves. This architecture creates a powerful balance between extensibility and control, ensuring new tools can be easily integrated while maintaining a consistent interaction model.

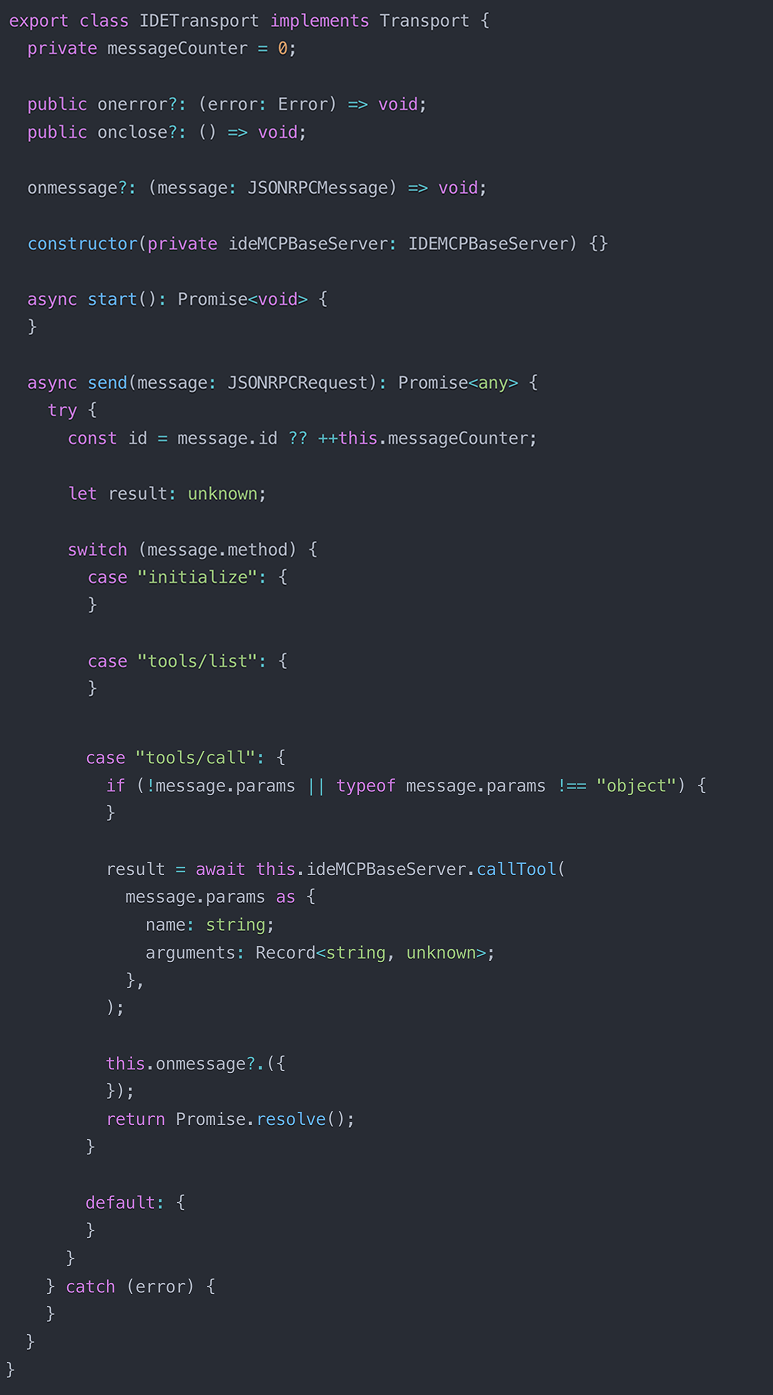

Here’s an example of integrating a custom tool:
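
One way to picture the registration step is the in-memory sketch below. It mirrors MCP's `tools/list` and `tools/call` method names but is not an MCP server or SDK: `ToolRegistry`, the decorator, and the `search_wiki` tool are hypothetical, and input schemas are omitted for brevity.

```python
from typing import Callable, Optional

class ToolRegistry:
    """Hypothetical in-memory registry; a real server would also publish input schemas."""

    def __init__(self) -> None:
        self._tools: dict[str, tuple[str, Callable[..., str]]] = {}

    def tool(self, name: str, description: str):
        """Decorator that registers a plain function as a callable tool."""
        def decorator(fn: Callable[..., str]) -> Callable[..., str]:
            self._tools[name] = (description, fn)
            return fn
        return decorator

    def handle(self, method: str, params: Optional[dict] = None) -> dict:
        params = params or {}
        if method == "tools/list":
            return {"tools": [{"name": n, "description": d}
                              for n, (d, _) in self._tools.items()]}
        if method == "tools/call":
            entry = self._tools.get(params.get("name", ""))
            if entry is None:
                return self._result(f"unknown tool: {params.get('name')}", is_error=True)
            try:
                return self._result(entry[1](**params.get("arguments", {})))
            except Exception as exc:  # report failures to the model instead of crashing
                return self._result(f"{type(exc).__name__}: {exc}", is_error=True)
        raise ValueError(f"unsupported method: {method}")

    @staticmethod
    def _result(text: str, is_error: bool = False) -> dict:
        return {"content": [{"type": "text", "text": text}], "isError": is_error}

registry = ToolRegistry()

@registry.tool("search_wiki", "Search the (hypothetical) company wiki.")
def search_wiki(query: str) -> str:
    return f"3 pages mention {query!r}"

listing = registry.handle("tools/list")
reply = registry.handle("tools/call", {"name": "search_wiki", "arguments": {"query": "deploy"}})
```

Adding a tool is then a matter of decorating one function; the agent logic that calls `handle` does not change.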

A significant technical advancement in the implementation process was the development of custom transport layers. These custom transports proved instrumental in unifying the handling of both built-in IDE tools and user-provided custom tools through a single consistent interface.

This architectural approach enabled a powerful capability: agents could now successfully complete complex tasks by seamlessly combining built-in tools with user-provided custom tools. This integration unlocked access to previously inaccessible information sources, allowing agents to leverage both native IDE capabilities and external resources such as company-specific knowledge bases provided through user-created tools.
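
The unifying role of a transport can be sketched as a single `send` interface with interchangeable implementations. This is an assumed design for illustration only: `Transport`, `InProcessTransport`, `JsonWireTransport`, and `ToolRouter` are hypothetical names, and the "wire" is simulated with a JSON round trip rather than a real process boundary.

```python
import json
from typing import Callable, Protocol

Handler = Callable[[dict], dict]

class Transport(Protocol):
    def send(self, request: dict) -> dict: ...

class InProcessTransport:
    """Built-in tool: the handler is called directly."""
    def __init__(self, handler: Handler) -> None:
        self.handler = handler

    def send(self, request: dict) -> dict:
        return self.handler(request)

class JsonWireTransport:
    """User-provided tool: simulates a process boundary by round-tripping JSON."""
    def __init__(self, handler: Handler) -> None:
        self.handler = handler

    def send(self, request: dict) -> dict:
        wire_request = json.dumps(request)
        wire_response = json.dumps(self.handler(json.loads(wire_request)))
        return json.loads(wire_response)

class ToolRouter:
    """Routes each tool name to its transport so callers see one interface."""
    def __init__(self) -> None:
        self.routes: dict[str, Transport] = {}

    def register(self, tool_name: str, transport: Transport) -> None:
        self.routes[tool_name] = transport

    def call(self, tool_name: str, arguments: dict) -> dict:
        return self.routes[tool_name].send({"name": tool_name, "arguments": arguments})

router = ToolRouter()
router.register("open_file", InProcessTransport(
    lambda r: {"text": f"opened {r['arguments']['path']}"}))
router.register("search_wiki", JsonWireTransport(
    lambda r: {"text": f"wiki hits for {r['arguments']['query']}"}))

outputs = [router.call("open_file", {"path": "app.py"}),
           router.call("search_wiki", {"query": "deploy"})]
```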

Agentic flows in practice

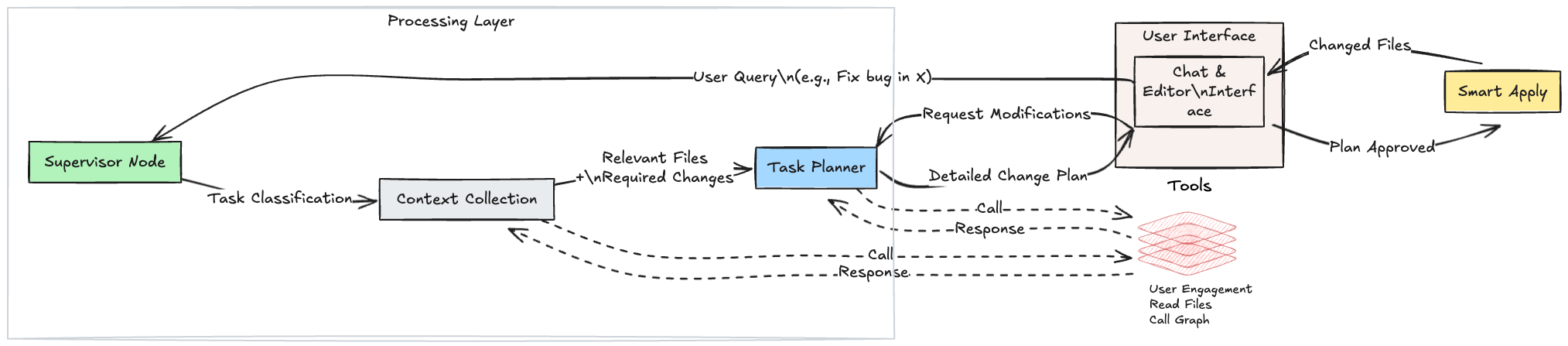

In our new workflow for agentic coding in Qodo Gen, the user’s request passes through a LangGraph that might:

- Call an LLM node to figure out the user’s intent.

- Use a tool to fetch relevant files from the local repo (via MCP).

- Summarize that code context (another LLM Node).

- Output a final result to the user—or decide it needs more context and loop back.

Controlling agent autonomy

One of our guiding principles is: “If you don’t need an LLM, don’t use an LLM.” We love generative models, but they’re non-deterministic and can be unpredictable, especially if they have free rein to call multiple tools in any sequence.

Instead of letting a single “all-knowing agent” rummage freely through every tool (“YOLO style”), we build more opinionated flows. Each flow or sub-agent has a specific purpose, for example gathering context, answering the user’s question, or producing code. This helps prevent bizarre or unexpected tool usage.
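
One simple guardrail of this kind is a per-flow tool allowlist, sketched below. The flow names echo the purposes listed above, but `FLOW_TOOLS`, `guarded_call`, and the stub tools are assumptions for illustration rather than our actual configuration.

```python
from typing import Callable

# Hypothetical allowlists: each flow may only call the tools it needs.
FLOW_TOOLS: dict[str, frozenset[str]] = {
    "gather_context": frozenset({"read_file", "search_code"}),
    "answer_question": frozenset({"read_file"}),
    "produce_code": frozenset({"read_file", "write_file"}),
}

class ToolNotAllowed(Exception):
    """Raised when a flow asks for a tool outside its allowlist."""

def guarded_call(flow: str, tool_name: str,
                 tools: dict[str, Callable[..., str]], **arguments: str) -> str:
    if tool_name not in FLOW_TOOLS.get(flow, frozenset()):
        raise ToolNotAllowed(f"{tool_name!r} is not available in flow {flow!r}")
    return tools[tool_name](**arguments)

TOOLS: dict[str, Callable[..., str]] = {
    "read_file": lambda path: f"<contents of {path}>",
    "search_code": lambda query: f"<matches for {query}>",
    "write_file": lambda path, text: f"wrote {len(text)} chars to {path}",
}

allowed = guarded_call("answer_question", "read_file", TOOLS, path="app.py")
try:
    guarded_call("answer_question", "write_file", TOOLS, path="app.py", text="x")
    blocked = False
except ToolNotAllowed:
    blocked = True
```

Unknown flows get an empty allowlist, so the default is to deny rather than to permit.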

Ultimately, we’re incrementally building toward more advanced capabilities (like letting the AI automatically create or update tickets), but we want guardrails in place. LangGraph gives us that structural control.

Conclusion

By designing agentic workflows around flexibility and control, we’ve created an AI system that doesn’t just execute tasks but orchestrates complex development workflows. LangGraph provides the backbone for this, allowing us to expand AI’s role without sacrificing reliability, while MCP gives us full support for external tooling.

What this means for developers:

- More intelligent automation: AI selects the right tools and gathers relevant information.

- An extensible system: Workflows can evolve without disrupting the core infrastructure.

- A structured approach to AI autonomy: AI remains adaptable but within clear boundaries.

The future of developer tools isn’t just about incorporating more AI—it’s about orchestrating AI capabilities intelligently.