Authored by Mark Tapscott via The Epoch Times (emphasis ours),

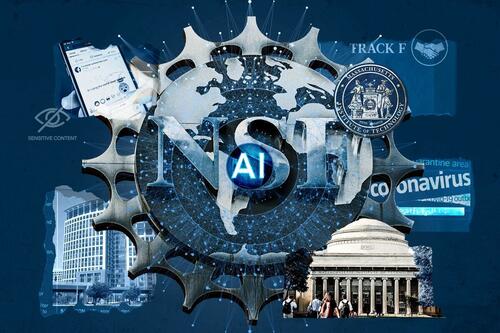

Officials from the National Science Foundation tried to conceal the spending of millions of taxpayer dollars on research and development for artificial intelligence tools used to censor political speech and influence the outcome of elections, according to a new congressional report.

The report looking into the National Science Foundation (NSF) is the latest addition to a growing body of evidence that critics claim shows federal officials—especially at the FBI and the CIA—are creating a “censorship-industrial complex” to monitor American public expression and suppress speech disfavored by the government.

“In the name of combatting alleged misinformation regarding COVID-19 and the 2020 election, NSF has been issuing multimillion-dollar grants to university and nonprofit research teams,” states the report by the House Judiciary Committee and its Select Subcommittee on the Weaponization of the Federal Government.

“The purpose of these taxpayer-funded projects is to develop AI-powered censorship and propaganda tools that can be used by governments and Big Tech to shape public opinion by restricting certain viewpoints or promoting others.”

The report also described, based on previously unknown documents, elaborate efforts by NSF officials to cover up the true purposes of the research.

The efforts included tracking public criticism of the foundation’s work by conservative journalists and legal scholars.

The NSF also developed a media strategy “that considered blacklisting certain American media outlets because they were scrutinizing NSF’s funding of censorship and propaganda tools,” the report said.

NSF Responds

In a statement to The Epoch Times, an NSF spokesman categorically rejected the report’s allegations.

“NSF does not engage in censorship and has no role in content policies or regulations. Per statute and guidance from Congress, we have made investments in research to help understand communications technologies that allow for things like deep fakes and how people interact with them,” the spokesman said.

“We know our adversaries are already using these technologies against us in multiple ways. We know that scammers are using these techniques on unsuspecting victims. It is in this nation’s national and economic security interest to understand how these tools are being used and how people are responding so we can provide options for ways we can improve safety for all.”

The spokesman also denied that NSF ever sought to conceal its investments in the so-called Track F program, and said the foundation does not follow the media policy outlined in the documents discovered by the committee.

Track F Program Funding

The $39 million Track F Program is the heart of the congressional report’s analysis of a systematic federal effort to replace human “disinformation” monitors with AI-driven digital systems that are capable of vastly more comprehensive monitoring and censoring.

“The NSF-funded projects threaten to help create a censorship regime that could significantly impede the fundamental First Amendment rights of millions of Americans, and potentially do so in a manner that is instantaneous and largely invisible to its victims,” the congressional report warned.

During NSF’s solicitation and sifting of dozens of bids it received in response to its request for proposals, a University of Michigan team, with its “WiseDex” tool, pitched federal officials on enabling the government “to externalize the difficult responsibility of censorship.”

The Michigan team was one of four Track F funding recipients spotlighted by the congressional report. A total of 12 recipients were involved in Track F funding and activities.

The second of the four spotlighted teams is from Meedan, a San Francisco-based group that describes itself as “a global technology not-for-profit that builds software and programmatic initiatives to strengthen journalism, digital literacy, and accessibility of information online and off. We develop open-source tools for creating and sharing context on digital media through annotation, verification, archival, and translation.”

In fact, according to the congressional report, Meedan’s Co-Insights Program uses AI to identify and counter “misinformation” on a massive scale.

In one illustration that the group provided to NSF in its funding pitch, the plan was to “crawl” more than 750,000 blogs and media articles daily for misinformation and fact-checking on themes such as “undermining trust in mainstream media,” “fear-mongering and anti-Black narratives,” and “weakening political participation.”

The Co-Insights Program, according to the congressional report, was “part of a much larger, long-term goal by the nonprofit. As [Scott] Hale, the director of research at Meedan, explained in an email to NSF, in his ‘dream world,’ Big Tech would collect all of the censored content to enable ‘disinformation’ researchers to use that data to create ‘automated detection’ to censor any similar speech automatically.”

The third spotlighted team, from the University of Wisconsin, received $5.75 million in NSF funding for its CourseCorrect project “to develop a tool to ‘empower efforts by journalists, developers, and citizens to fact-check delegitimizing information’ about ‘election integrity and vaccine efficacy’ on social media.”

The tool “would allow ‘fact-checkers to perform rapid-cycle testing of fact-checking messages and monitor their real-time performance among online communities at-risk of misinformation exposure,’” the congressional report said.

‘Effective Interventions’ to Educate Americans

The Massachusetts Institute of Technology (MIT) team that developed its “Search Lit” tool with government funding was the fourth of the highlighted NSF grant recipients.

Officials with NSF asked the MIT team “to develop ‘effective interventions’ to educate Americans—specifically, those that the MIT researchers alleged ‘may be more vulnerable to misinformation campaigns’—on how to discern fact from fiction online.

“In particular, the MIT team believed that conservatives, minorities, and veterans were uniquely incapable of assessing the veracity of content online,” the congressional report noted.

“In order to build a ‘more digitally discerning public,’ the Search Lit team proposed developing tools that could support the government’s viewpoint on COVID-19 public health measures and the 2020 election.”

In a study by one of the MIT team’s members, people who hold as sacred certain texts and documents, most notably the Bible and the U.S. Constitution, were described as “often focused on reading a wide array of primary sources, and performing their own synthesis.” The study further alleged that, “unlike expert lateral readers,” the conservative respondents made “no such effort” to “eliminate bias that might skew results from search terms.”
