Tech companies focused on chatbot development, such as OpenAI, Google, and Anthropic, are facing significant near-term headwinds in advancing large language models (LLMs). Despite tens of billions of dollars in investment, these firms are seeing diminishing returns in their efforts to build more sophisticated LLMs.

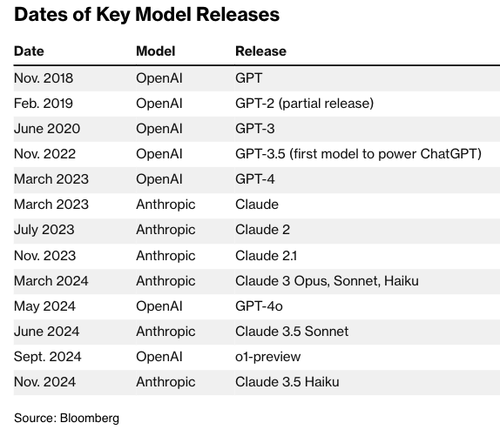

Sources told Bloomberg that OpenAI's new Orion LLM has run into performance limitations. The new model would still outperform the firm's existing models, but it is not expected to deliver a leap as significant as the one from GPT-3.5 to GPT-4.

As of late summer, for example, Orion fell short when trying to answer coding questions that it hadn't been trained on, the people said. Overall, Orion is so far not considered to be as big a step up from OpenAI's existing models as GPT-4 was from GPT-3.5, the system that originally powered the company's flagship chatbot, the people said. -BBG

The breakneck pace of LLM development also appears to have hit a proverbial brick wall at Google, where, according to sources, its Gemini software has not lived up to expectations. The sources added that Anthropic has also faced challenges with its long-awaited Claude 3.5 Opus model.

Bloomberg noted that one of the biggest obstacles these firms have encountered is "finding new, untapped sources of high-quality, human-made training data that can be used to build more advanced AI systems."

Sources attributed Orion's weak coding performance to a lack of sufficient coding data for training. They added that even though the model improves on its predecessors, it has become increasingly difficult to justify the massive costs of building and operating new models.

The setbacks may reveal an inconvenient truth for a tech industry plowing tens of billions of dollars into AI data centers and infrastructure: reaching artificial general intelligence (AGI) in the near future might be a pipe dream.

John Schulman, an OpenAI cofounder and research scientist who recently left the company, has said AGI could be achieved within a few years. Dario Amodei, CEO of Anthropic, believes it could be achieved by 2026.

However, Margaret Mitchell, chief ethics scientist at AI startup Hugging Face, said, "The AGI bubble is bursting a little bit," adding that "different training approaches" may be needed for further progress.

In a recent interview with Lex Fridman, Anthropic's Amodei said there are "lots of things" that could "derail" AI progress, including the possibility that "we could run out of data." However, he was optimistic that AI researchers would overcome any hurdles.

Scaling efforts are slowing...

"It is less about quantity and more about quality and diversity of data," said Lila Tretikov, head of AI strategy at New Enterprise Associates and former deputy CTO at Microsoft.

She added, "We can generate quantity synthetically, yet we struggle to get unique, high-quality datasets without human guidance, especially when it comes to language."

Noah Giansiracusa, an associate professor of mathematics at Bentley University in Waltham, Massachusetts, said AI models will continue to improve, but that the hypergrowth of recent years is unsustainable:

"We got very excited for a brief period of very fast progress. That just wasn't sustainable."

If tech firms are struggling to advance LLM performance, that raises serious doubts about whether such large investments in AI infrastructure can continue.

"The infrastructure build for AI is the bubble. The AI 2.0 companies that can actually figure out a way to monetize it are the investments years from now. Might as well light a match to this fund. The infrastructure build like the telecom infrastructure during the dotcom boom will be oversupplied and pricing will collapse," Edward Dowd recently noted on X.

Meanwhile, Nvidia's stock has soared to new highs.

That AI companies are struggling to develop more advanced LLMs is undoubtedly an ominous sign for the AI bubble.