Big tech companies are investing billions of dollars in AI data centers to meet escalating demand for the computing power and infrastructure that advanced AI workloads require.

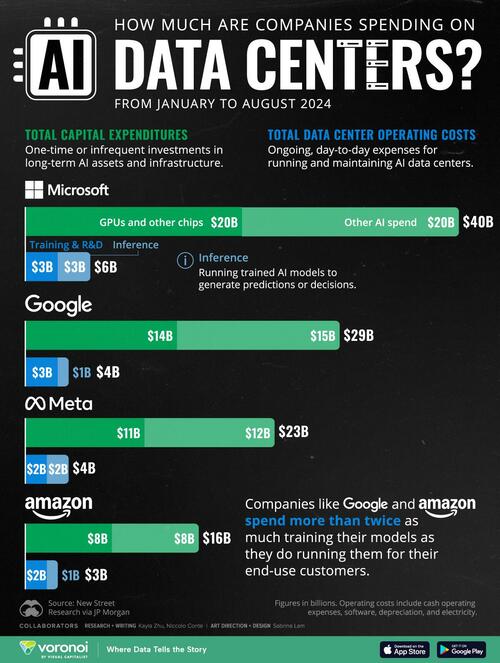

This graphic, via Visual Capitalist's Kayla Zhu, visualizes the total AI capital expenditures and data center operating costs for Microsoft, Google, Meta, and Amazon from January to August 2024.

AI capital expenditures are one-time or infrequent investments in long-term AI assets and infrastructure.

Data center operating costs are ongoing expenses for running and maintaining AI data centers on a day-to-day basis.

The data comes from New Street Research via JP Morgan and is current as of August 2024. Figures are in billions of dollars. Operating costs include cash operating expenses, software, depreciation, and electricity.

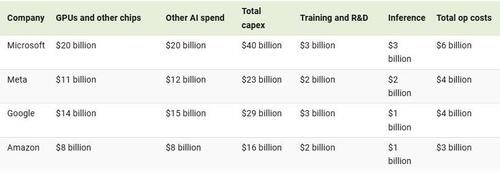

Below, we show the total AI capital expenditures and data center operating costs for Microsoft, Google, Meta, and Amazon.

Microsoft currently leads the pack in total AI data center costs, having spent $46 billion on capital expenditures and operating costs combined as of August 2024.

Microsoft also has the most data centers, at 300, followed by Amazon at about 215. However, because facilities vary in size and capacity, the number of data centers doesn’t always reflect total computing power.

In September 2024, Microsoft and BlackRock unveiled a $100 billion plan through the Global Artificial Intelligence Infrastructure Investment Partnership (GAIIP) to develop AI-focused data centers and the energy infrastructure to support them.

Notably, both Google and Amazon currently spend more than twice as much training their models as they do running them for their end-use customers (inference).

Training a major AI model is becoming increasingly expensive, as it requires large data sets, complex calculations, and substantial computational resources, often including powerful GPUs and significant energy consumption.

However, as the frequency and scale of AI model deployments continue to grow, the cumulative cost of inference is likely to surpass these initial training costs, which is already the case for OpenAI’s ChatGPT.
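The crossover described above can be illustrated with simple arithmetic. The sketch below is a minimal Python example, not taken from the source data: the function name, its parameters, and any numbers passed to it are hypothetical. It assumes training is a one-time cost and that inference spending recurs monthly, optionally growing at a fixed rate as deployments scale.

```python
def months_until_inference_exceeds_training(
    training_cost: float,
    monthly_inference_cost: float,
    monthly_growth: float = 0.0,
) -> int:
    """Return the first month in which cumulative inference spending
    exceeds a one-time training cost.

    All inputs are hypothetical illustration values in the same currency
    unit; monthly_growth is a fraction (0.1 means 10% growth per month).
    """
    if training_cost < 0 or monthly_inference_cost <= 0 or monthly_growth < 0:
        raise ValueError("costs must be positive and growth non-negative")

    cumulative = 0.0
    cost_this_month = monthly_inference_cost
    month = 0
    # Add each month's inference bill until the running total passes
    # the training cost.
    while cumulative <= training_cost:
        month += 1
        cumulative += cost_this_month
        cost_this_month *= 1 + monthly_growth
    return month
```

With made-up inputs of 100 units for training and 10 units per month for inference, the function returns 11 with flat usage and 8 when inference spending grows 10% per month, which shows how growth in deployments pulls the crossover point earlier.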

To learn more about data center distribution in the U.S., check out this graphic that shows the percentage of U.S. states’ electricity that data centers consumed.