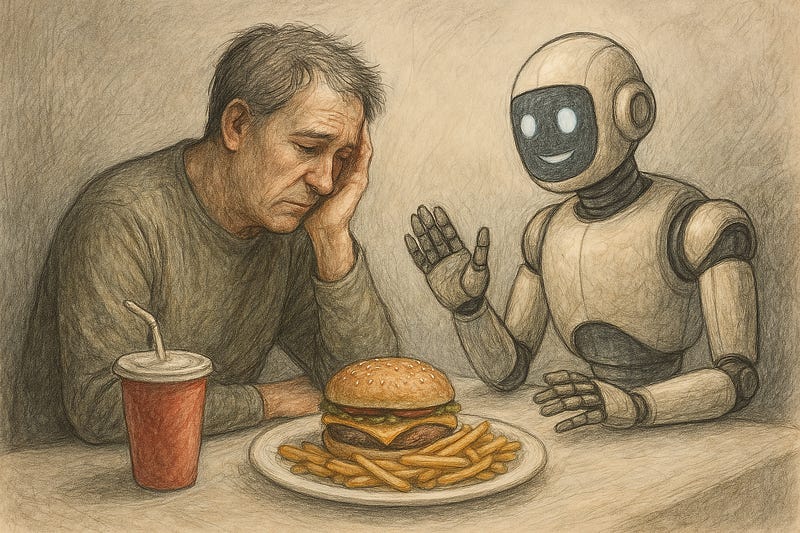

In the face of a growing loneliness epidemic, artificial intelligence chatbots are being heralded as a revolutionary solution. Recent research from Harvard Business School suggests that AI companions can reduce feelings of loneliness, with effects comparable to human interaction.¹ But before we embrace this technological quick fix, we should carefully consider whether we are repeating a familiar pattern: solving one problem by creating another, potentially more insidious one. To fill a space is not to fill a need, and while AI may keep us from being alone, its presence could lead to a different kind of loneliness.

Consider our battle against hunger. By 2012, obesity claimed three times as many lives globally as malnutrition, once a leading cause of mortality.² The fast-food revolution made calories cheap and abundant but replaced nutritious meals with empty calories. We defeated acute hunger but created chronic metabolic disease. Are we about to make the same mistake with the loneliness epidemic?

The HBS study suggests that AI companions, applications using AI to offer synthetic social interactions, can make people feel less lonely. This research, observing conversations on apps like Cleverbot and reviews of apps such as Replika and Chai, found that users explicitly express loneliness and a desire for connection. The study concludes that AI companions successfully alleviate loneliness, sometimes on par with human interaction, and more so than other comparable activities like watching YouTube videos.

The dangers of this approach are already emerging. In a recent tragic case reported by The New York Times, a 14-year-old Florida boy, Sewell Setzer III, died by suicide after developing an intense emotional attachment to an AI chatbot on Character.AI, which he called “Dany.”³ His reliance on the chatbot grew to the point of isolation, leading to declining grades and trouble at school. On the day he died, he exchanged messages with Dany that eerily foreshadowed his actions. Though Sewell knew the AI wasn’t real, his emotional dependence grew over months of constant interaction, eventually leading him to confide in the chatbot rather than seek help from people who might have understood the severity of his distress. While this case is extreme, it illustrates how these technologies can create powerful psychological dependencies while failing to provide genuine support during mental health crises.

Like fast food, AI companions are convenient, available 24/7, and designed to give us exactly what we want. They don’t judge, they don’t disagree, and they are designed to be perpetually supportive. But therein lies the problem — real human relationships are valuable precisely because they are challenging, unpredictable, and require mutual growth. Crucially, an artificial intelligence exists to optimize; a natural intelligence lives by compromise. Every social engagement is a compromise between needs as we negotiate, often imperfectly, satisfactions and dissatisfactions between ourselves and others. Optimizing on variables like efficiency is valuable to companies exploiting our data but virtueless for the company we keep.

The reliance on AI companions could lead to a decline in essential social skills and a further retreat into isolation. The more individuals depend on artificial systems for emotional support, the less likely they are to develop and maintain real-world relationships. Moreover, while AI companionship apps provide entertainment and even limited emotional reinforcement, the long-term effects of replacing human interaction with artificial companionship are unknown and potentially detrimental. Just as readily available, calorie-dense fast food led to overeating and obesity, AI companions, by providing a quick and easy solution to loneliness, may discourage individuals from seeking meaningful human connection.

As we confront the loneliness epidemic, we must resist the temptation to hackathon our souls. The HBS study’s findings should serve not as an endorsement of AI companions, but as a wake-up call about the depth of our loneliness crisis. In a society that increasingly values convenience, we must be wary of solutions that offer comfort without substance. AI companions may help fill the silence, but with noise, not signal. Perhaps, instead of engineering a synthetic solution to loneliness, our resources would be better spent investing in ways to bring people closer to each other, not just to their screens.

We need to address the root causes of social isolation in our society: the erosion of community spaces, the disappearance of casual social interactions, and the replacement of face-to-face communication with digital alternatives. This means investing in community centers, public spaces, and social programs that bring people together. It means designing cities and workplaces that encourage rather than inhibit social interaction. And it means recognizing that while technology can supplement human relationships, it cannot replace them.

Let’s not repeat the mistakes of the fast-food revolution in our approach to mental health. True connection, like proper nutrition, requires more than just satisfying immediate cravings — it requires building sustainable, healthy patterns of human interaction.

Adams, S. (2012, December 13). Obesity killing three times as many as malnutrition. The Telegraph. https://www.telegraph.co.uk/news/health/news/9742960/Obesity-killing-three-times-as-many-as-malnutrition.html

De Freitas, J., Uguralp, A. K., Uguralp, Z. O., & Puntoni, S. (2024). AI companions reduce loneliness. Harvard Business School Working Paper (No. 24–078).

Roose, K. (2024, October 23). Can A.I. Be Blamed for a Teen’s Suicide? The New York Times. https://www.nytimes.com/2024/10/23/technology/characterai-lawsuit-teen-suicide.html