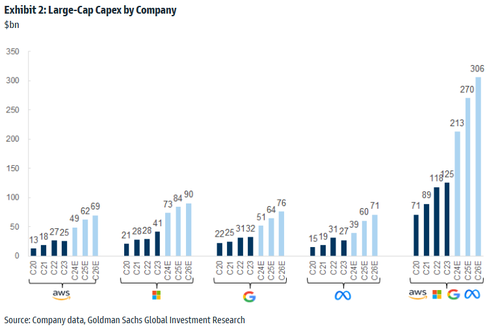

Goldman analysts led by George Tong returned to Silicon Valley for their second AI field trip, meeting with AI startups, public companies, VCs, and professors from Stanford, UCSF, and UC Berkeley to assess whether corporate America is truly embracing generative AI. The visit comes as record AI capex fuels hyperscale data center buildouts nationwide, while investors look for signs that the adoption phase, a shift beyond infrastructure into the application layer, will materialize.

"Insights indicate AI labs are expanding from the infrastructure layer to the application layer and LLM costs are sharply declining though capex may continue to rise as Gen AI usage and adoption grows," Tong wrote in a note to clients on Friday.

He continued: "Academic research on LLM technologies could further bring down costs. While software development costs are falling and increasing competitive and pricing risks, moats in application AI and SaaS companies include broader user distribution, engagement with power users to drive reinforcement learning from feedback loops, integration into workflows and leveraging proprietary data."

Tong's discussions with Silicon Valley business and academic leaders point to an acceleration in generative AI adoption starting in 2026.

Here's a summary of the findings:

Shift from infrastructure to applications: AI innovation is moving beyond chips and cloud (Nvidia, GPUs, etc.) toward actual end-user applications and vertical software solutions.

LLM costs are sliding: Training and using large language models are getting cheaper, though capex will still rise as usage expands.

Academia is helping reduce costs: University research may accelerate efficiency gains in AI models.

Software development deflation: Building with AI is cheaper and faster, but that means higher competition and pricing pressure for software companies.

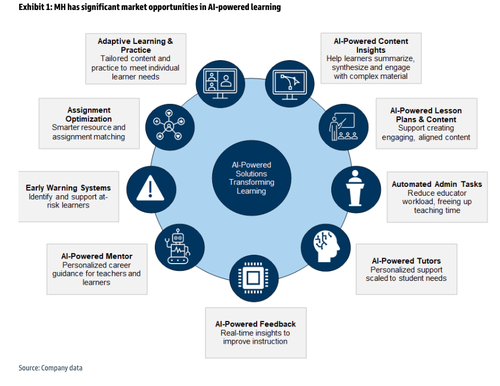

Tong said the conversations in Silicon Valley point to "positive implications" for S&P Global, Moody's, Iron Mountain, Verisk Analytics, and Thomson Reuters. He noted that his team has initiated coverage on McGraw-Hill with a "Buy" rating and a $27 12-month price target based on a "digital transformation" in the education space.

The analyst provided clients with a "chart of the week" that showed how McGraw-Hill is leveraging AI to improve product efficacy and drive growth.

Is the rate of AI adoption enough to justify this record capex spending by hyperscalers?

Let's hope so, or AI stocks face a hefty correction.

More in the full Goldman note available to pro subs.
