Gain visibility and control over your LLM usage. any-llm-gateway adds budgeting, analytics, and access management to any-llm, giving teams reliable oversight for every provider.

Track Usage, Set Limits, and Deploy Confidently Across Any LLM Provider

Managing LLM costs and access at scale is hard. Give users unrestricted access and you risk runaway costs. Lock it down too much and you slow down innovation. That's why today we're happy to announce that we have open-sourced any-llm-gateway!

We recently released version 1.0 of any-llm: a Python library that provides a consistent interface across multiple LLM providers (OpenAI, Anthropic, Mistral, your local model deployments, and more). any-llm-gateway builds on it: a FastAPI-based proxy server that adds production-grade budget enforcement, API key management, and usage analytics on top of any-llm's multi-provider foundation.

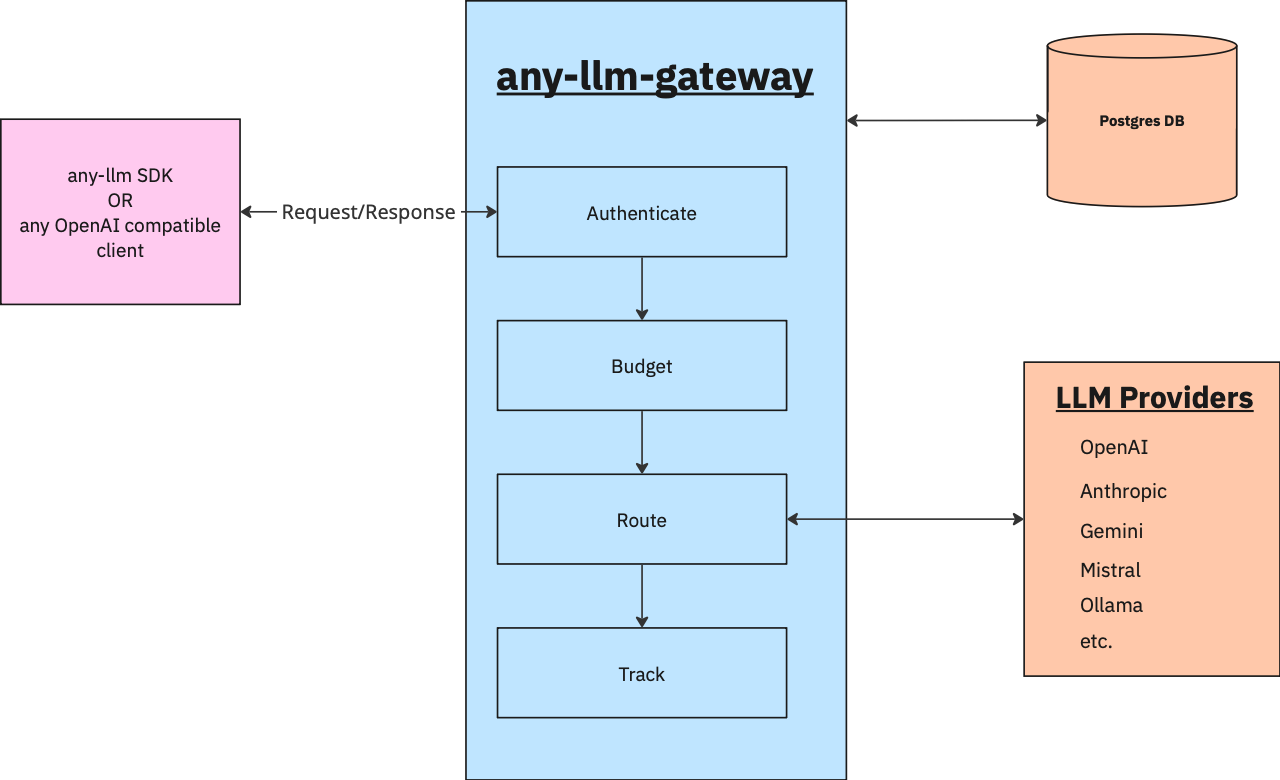

any-llm-gateway sits between your applications and LLM providers, exposing an OpenAI-compatible Completions API that works with any supported provider. Simply specify models using the provider:model format (e.g., openai:gpt-4o-mini, anthropic:claude-3-5-sonnet-20241022) and any-llm-gateway handles the rest, including streaming support with automatic token tracking.
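To make the provider:model format concrete, here is a minimal sketch of a client preparing a request for the gateway. The URL, the `/v1/chat/completions` path, and the `Authorization` header are assumptions made for illustration; check the gateway documentation for the actual endpoint and authentication header. The request is only built here, not sent.

```python
import json
import urllib.request

# Assumed address and path of a locally running gateway (hypothetical).
GATEWAY_URL = "http://localhost:8000/v1/chat/completions"


def split_model(model: str) -> tuple[str, str]:
    """Split a 'provider:model' string into its two parts."""
    provider, sep, name = model.partition(":")
    if not sep or not provider or not name:
        raise ValueError(f"expected 'provider:model', got {model!r}")
    return provider, name


def build_request(model: str, prompt: str, api_key: str) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat request for the gateway."""
    split_model(model)  # fail early on a malformed model string
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        GATEWAY_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            # Assumed header name; the gateway may expect a different one.
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )
```

Switching providers then only means changing the model string, for example from `openai:gpt-4o-mini` to `anthropic:claude-3-5-sonnet-20241022`.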

Key Features

Smart Budget Management

Create shared budget tiers with automatic daily, weekly, or monthly resets. A single budget can cover multiple users and can either be enforced automatically or run in tracking-only mode, with no manual intervention required.
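As a rough illustration of how a budget with periodic resets and a tracking-only mode could behave, consider the sketch below. It is not the gateway's implementation: the `Budget` class, its field names, and the 30-day approximation of a month are all assumptions chosen to keep the example short.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta

# Hypothetical reset periods; "monthly" is approximated as 30 days here.
RESET_PERIODS = {
    "daily": timedelta(days=1),
    "weekly": timedelta(weeks=1),
    "monthly": timedelta(days=30),
}


@dataclass
class Budget:
    limit_usd: float
    period: str                  # one of the RESET_PERIODS keys
    period_start: datetime
    spent_usd: float = 0.0
    tracking_only: bool = False  # record spend but never block requests

    def roll_over(self, now: datetime) -> None:
        """Reset the spend counter once the current period has elapsed."""
        if now - self.period_start >= RESET_PERIODS[self.period]:
            self.spent_usd = 0.0
            self.period_start = now

    def allows_request(self, now: datetime) -> bool:
        """Return True if a new request may proceed under this budget."""
        self.roll_over(now)
        return self.tracking_only or self.spent_usd < self.limit_usd

    def record(self, cost_usd: float) -> None:
        """Add the cost of a completed request to the running total."""
        self.spent_usd += cost_usd
```

Because several users can point at the same `Budget` object in this sketch, their spend counts against one shared limit, which mirrors the idea of a shared tier.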

Flexible API Key System

Choose between master key authentication (ideal for trusted services) or virtual API keys. Virtual keys can carry expiration dates and metadata, and can be activated, deactivated, or revoked on demand, all while being automatically associated with users for spend tracking.
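The lifecycle of a virtual key can be pictured with a small sketch like the one below. The `VirtualKey` class and its fields are hypothetical names used for illustration and do not reflect the gateway's actual data model.

```python
from dataclasses import dataclass, field
from datetime import datetime
from typing import Optional


@dataclass
class VirtualKey:
    key_id: str
    user_id: str                           # owner, used for spend attribution
    expires_at: Optional[datetime] = None  # None means the key never expires
    is_active: bool = True                 # set to False to deactivate the key
    metadata: dict = field(default_factory=dict)

    def is_usable(self, now: datetime) -> bool:
        """A key is usable when it is active and has not yet expired."""
        if not self.is_active:
            return False
        return self.expires_at is None or now < self.expires_at
```

In this sketch, deactivating a key is reversible (flip `is_active` back), whereas revoking it would mean deleting the record altogether.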

Complete Usage Analytics

Every request is logged with full token counts, costs (based on admin-configured per-token prices), and metadata. Track spending per user, view detailed usage history, and get the observability you need for cost attribution and chargebacks.
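The cost of a single request follows from its token counts and the configured prices. The sketch below shows the arithmetic under the assumption that prices are expressed per million tokens; the `ModelPricing` class and its field names are hypothetical, and any prices passed in are placeholders rather than real provider rates.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelPricing:
    # Assumed unit: US dollars per one million tokens.
    input_per_million_usd: float
    output_per_million_usd: float


def request_cost(pricing: ModelPricing, prompt_tokens: int, completion_tokens: int) -> float:
    """Compute the cost of one request from its token counts."""
    total = (
        prompt_tokens * pricing.input_per_million_usd
        + completion_tokens * pricing.output_per_million_usd
    )
    return total / 1_000_000
```

Summing these per-request costs by user is what makes per-user spend tracking and chargebacks possible.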

Production-Ready Deployment

Deploy with Docker in minutes, configure via YAML or environment variables, and scale on Kubernetes using the built-in liveness and readiness probes.

Getting Started

The fastest way to try any-llm-gateway is to head over to our quickstart, which guides you through configuration and deployment.

Check out our documentation for comprehensive guides on authentication, budget management, and configuration. We've also updated the any-llm SDK so you can easily connect to your gateway as a client.

Whether you're building a SaaS application with tiered pricing, managing LLM access for a research team, or implementing cost controls for your organization, any-llm-gateway provides the infrastructure you need to deploy, budget, monitor, and control LLM access with confidence.

Get started today: https://mozilla-ai.github.io/any-llm/gateway/quickstart/