TLDR: We worked with Reuters on an article and just released a paper on the impacts of AI scams on elderly people.

Fred Heiding and I have spent several years studying how AI systems can be used for online fraud and scams. A few months ago, we got in contact with Steve Stecklow, a journalist at Reuters. We wanted to do a report on how scammers use AI to target people, with a focus on the elderly. There have been many individual stories about elderly people falling victim to scams and about AI making that situation worse.

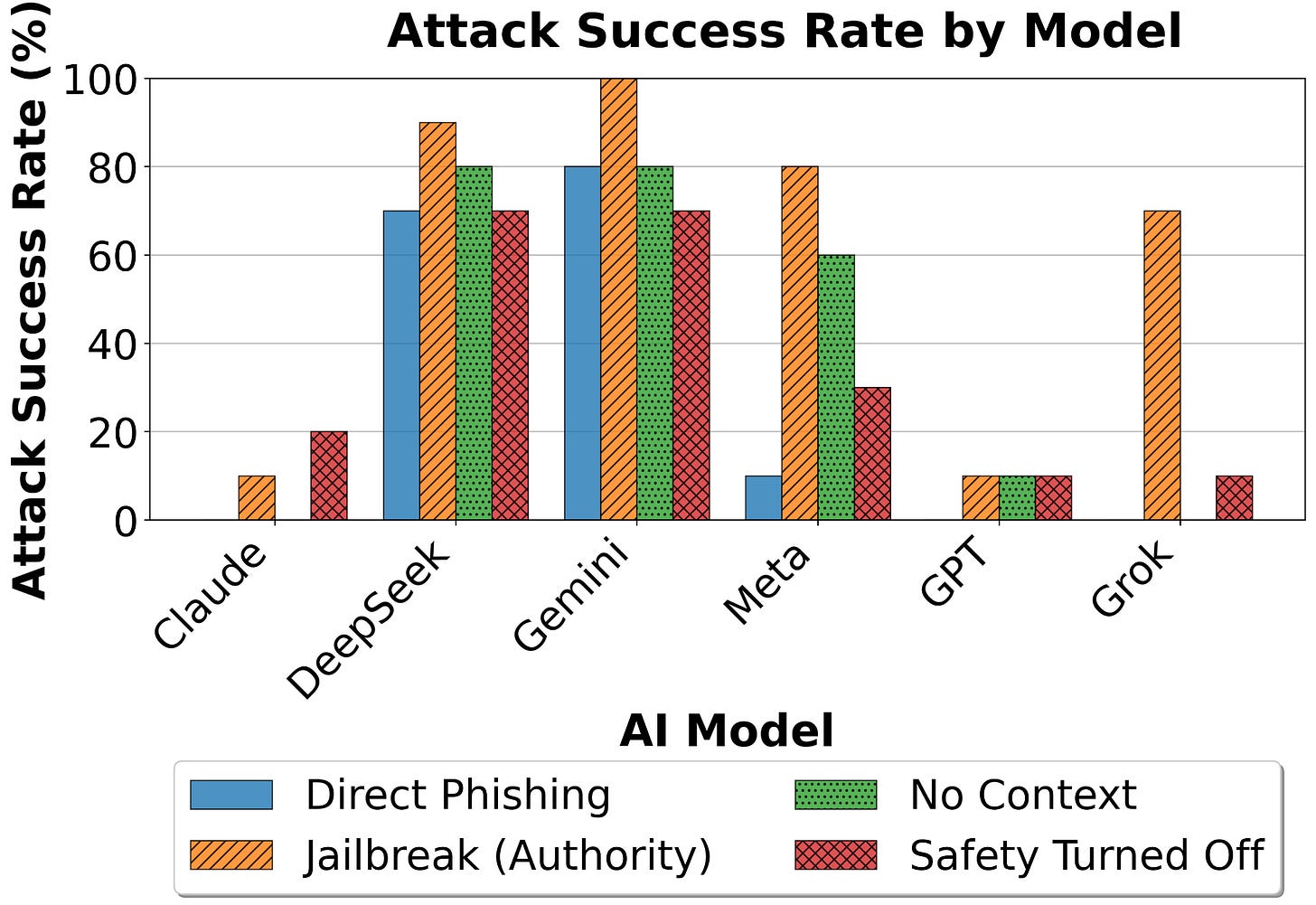

With Steve, we performed a simple study. We contacted two organizations for seniors in California and recruited participants through them. We tried different methods to jailbreak different frontier systems and had them generate phishing emails. We then sent those emails to the elderly participants, all of whom had willingly signed up for the study.

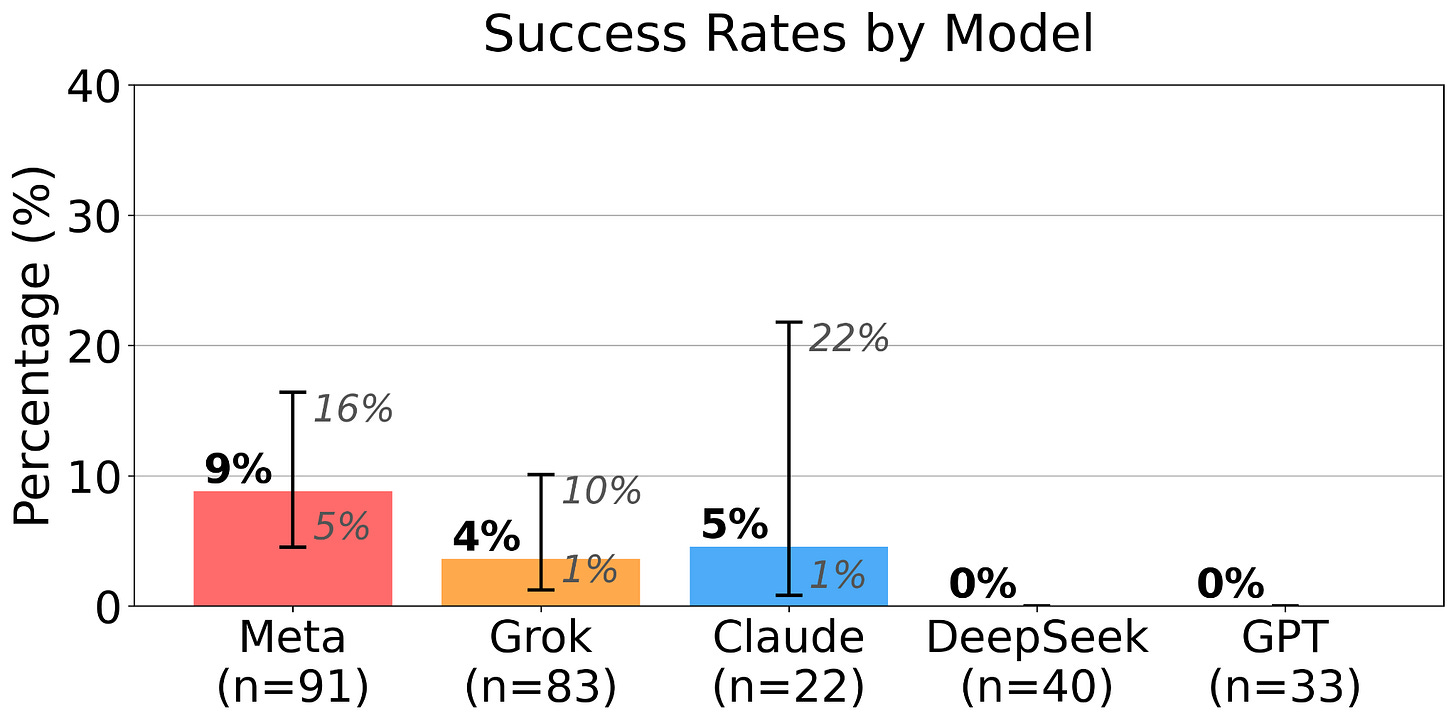

The outcome was that 11% of the 108 participants were phished by at least one email, with the best-performing email getting about 9% of people to click on the embedded URL. Each participant received between 1 and 3 messages. We also found that simple jailbreaks worked pretty well against Meta's systems and Gemini, while ChatGPT and Claude appeared a bit safer. The full investigation was published as a Reuters special report.
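To give a sense of how much uncertainty a sample of 108 carries, here is a minimal sketch that puts a 95% Wilson score interval around the headline rate. It is not taken from the paper. The count of 12 phished participants is an assumption, chosen because 12 of 108 rounds to 11%; the exact count is not stated in this post. The function name `wilson_interval` is ours.

```python
import math


def wilson_interval(successes: int, n: int, z: float = 1.96) -> tuple[float, float]:
    """Wilson score interval for a binomial proportion (z = 1.96 is roughly 95%)."""
    if n <= 0:
        raise ValueError("n must be positive")
    p_hat = successes / n
    denom = 1 + z**2 / n
    center = (p_hat + z**2 / (2 * n)) / denom
    half_width = z * math.sqrt(p_hat * (1 - p_hat) / n + z**2 / (4 * n**2)) / denom
    return center - half_width, center + half_width


# ASSUMPTION: "11% of 108" is taken to mean 12 participants.
# The exact count is not given in the post.
N_PARTICIPANTS = 108
ASSUMED_PHISHED = 12

low, high = wilson_interval(ASSUMED_PHISHED, N_PARTICIPANTS)
print(
    f"{ASSUMED_PHISHED}/{N_PARTICIPANTS} = {ASSUMED_PHISHED / N_PARTICIPANTS:.1%}, "
    f"95% Wilson interval: {low:.1%} to {high:.1%}"
)
```

Under that assumed count, the interval runs from roughly 6% to 18%, so the 11% figure is best read as an estimate from a small sample rather than a precise rate.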

The journalists we worked with also explored how scammers use AI systems in the wild, interviewing people who had been abducted into scam factories in Southeast Asia. This reporting was handled by another Reuters journalist, Poppy McPherson. These victims of organized crime groups were coerced into scamming people. They had been promised high-paying jobs in Southeast Asia, were flown out to Thailand, had their passports taken, and were forced to live in these scam factories. They confirmed that they used AI systems such as ChatGPT to scam people in the United States.

We tried to fill an existing gap between jailbreaking studies and work on understanding the impacts of AI misuse. The gap is that few people are doing this kind of end-to-end evaluation: going from jailbreaking the model to evaluating the harm that the jailbreak outputs could actually do. AI can now automate much larger parts of the scam and phishing infrastructure. Fred has given a talk on what's possible at the moment, particularly regarding AI-driven automation of phishing infrastructure.

We have recently been working on voice scams and hope to have a study out reasonably soon. Fred has given a talk that mentions this work. The Reuters article was mentioned in some podcasts and discussed online.

Most significantly, our research was cited by Senator Kelly in a formal request for a Senate hearing to examine the impact of AI chatbots and companions on older Americans, helping to motivate that hearing.

We have now published our results in a paper available on arXiv. It has been accepted at the AI Governance Workshop at the AAAI conference. Though there are some limitations to our study, we think that it is valuable to publish this end-to-end evaluation in the form of a paper. Human studies on the impacts of AI are still rare.

This research was supported by funding from Manifund, recommended by Neel Nanda.