Society would have you believe that self-hosting a NAT Gateway is “crazy”, “irresponsible” and potentially even “dangerous”. In this post I hope to shed some light on why someone would go down this path, what the benefits are, and what my experience was implementing it in a real engineering organization.

What even is a NAT Gateway

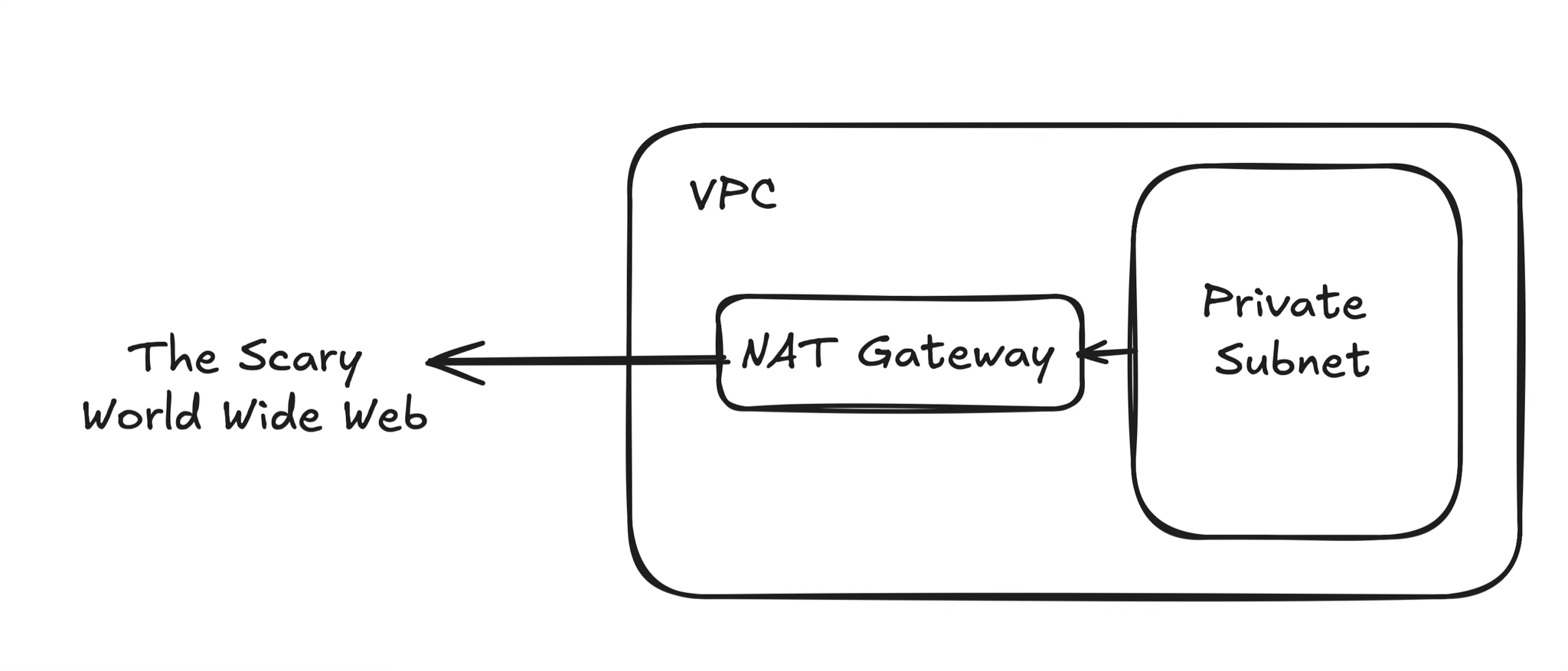

It's important to start with why. Why would someone even think about replacing a core part of AWS infrastructure? What does a NAT Gateway even do? For those unfamiliar, a NAT Gateway acts as a one-way door: it lets resources in your private subnet reach the internet without allowing anyone on the internet to open a connection back in (replies to connections you started still get through). This is an important part of good network design. If unsolicited inbound traffic were allowed, it would pose a massive security issue - anyone on the internet could reach your internal services. A NAT Gateway is a bouncer at a club - but this club only lets people out, no one can enter.
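To make the one-way door concrete, here is a toy Python model of the idea. It is only a sketch: `ToyNat`, the port numbers, and the documentation-range IP addresses are all made up for illustration, and a real NAT tracks far more state (protocols, timeouts, port reuse). The point is just that outbound traffic creates a mapping, and inbound traffic is only delivered if it matches one.

```python
from dataclasses import dataclass, field


@dataclass
class ToyNat:
    """Toy source NAT: outbound flows open a mapping, inbound traffic needs one."""

    public_ip: str
    next_port: int = 40000
    # public port -> (private ip, private port, remote ip, remote port)
    table: dict = field(default_factory=dict)

    def outbound(self, private_ip, private_port, remote_ip, remote_port):
        """A private host opens a connection; the NAT rewrites its source address."""
        public_port = self.next_port
        self.next_port += 1
        self.table[public_port] = (private_ip, private_port, remote_ip, remote_port)
        return (self.public_ip, public_port)

    def inbound(self, remote_ip, remote_port, public_port):
        """Return the private destination for a reply, or None to drop the packet."""
        entry = self.table.get(public_port)
        if entry is None:
            return None  # nobody inside asked for this traffic
        private_ip, private_port, expected_ip, expected_port = entry
        if (remote_ip, remote_port) != (expected_ip, expected_port):
            return None  # a mapping exists, but for a different remote endpoint
        return (private_ip, private_port)


nat = ToyNat(public_ip="203.0.113.10")
src = nat.outbound("10.0.1.5", 51515, "198.51.100.7", 443)
print(src)                                       # ('203.0.113.10', 40000)
print(nat.inbound("198.51.100.7", 443, src[1]))  # ('10.0.1.5', 51515)
print(nat.inbound("192.0.2.99", 443, src[1]))    # None
```

The reply from the server the private host contacted is let back in; the stranger knocking on the same port is dropped.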

The catch is that this creates a chokepoint: your internal services still have to talk to the internet (think any API call ever), so your entire infrastructure relies on the NAT Gateway to handle outbound internet traffic.

AWS has entered the chat

AWS is primed for this - folks need a high availability, high uptime NAT Gateway in order to function. And due to this requirement they can charge (in my opinion) an exorbitant amount to provide this service. What are you going to do? They can guarantee that this critical piece of infrastructure will scale & be highly available while your ChatGPT wrapper blows up!

DevOps & Infrastructure engineers know the pain of seeing the NAT Gateway hours & NAT Gateway bytes line items on the AWS bill. Society is breathing down your neck, saying “There's nothing you can do about it” and “Think of it as the cost of doing business”. To them I say: you’re wrong, you can do anything you set your mind to.

Why would you even think of this?

Before diving into my implementation, I think it's important to state that this is not a one-size-fits-all solution. I recently worked with Vitalize to speed up some of their GitHub Actions. We decided to self-host GitHub runners in their private subnet, along with a very robust and deep set of integration tests that run on every PR. Because of this, the dominant cost tended to be NAT Gateway bytes, as an enormous amount of traffic was going through their private subnets.

This was the major motivation for exploring alternatives. We still run NAT Gateways in production (for now), but in lower-risk environments with major cost upside, the ability to delete potentially 10-15% of your daily AWS bill is quite appetizing (depending on how much NAT Gateway costs contribute to your overall spend).

Options

So you've made it this far - now it's time to start shopping around. The good news is that there are heroes in the open source community who have done most of the hard parts for you. In my research, I came across two major options.

Option 1: Fck-NAT

This is the main alternative that folks find when first looking this up. Essentially it boils down to a purpose-built AMI that Andrew Guenther has been maintaining. There are some limitations, as noted on the public-facing docs here, but in general it is quite straightforward. There is a Terraform module that makes things fairly intuitive to set up, which I dive into deeper under the implementation section below.

Option 2: AlterNAT

This is another alternative that I thought deserved a special mention. Maintained by Chime here, this is a much more in-depth and ‘production’ alternative to Fck-NAT.

As mentioned above, all outbound traffic depends on the NAT, so a self-hosted NAT Gateway (running on an EC2 instance) becomes a single point of failure if anything happens to that instance. The way that AlterNAT/Chime has gotten around that is quite clever (and complex). From my rudimentary understanding, they use a mix of instances across availability zones (similar to Fck-NAT) to get ahead of downtime in a certain AZ. But they take it a step further by also employing Lambdas that constantly poll to ensure the EC2 instance is behaving as expected. In conjunction with standby NAT Gateways, this allows you to quickly fail over to the AWS-managed NAT Gateway if an EC2 instance ever fails. While this will not result in zero downtime, it can drastically reduce any disruption by automatically updating route tables.
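The poll-then-fail-over idea can be sketched in a few lines of Python. To be clear, this is not AlterNAT's actual code, just my reading of the pattern: a checker running in the private subnet tries to reach the internet, and if it can't, it repoints each private route table's default route at the standby NAT Gateway. The function names, the check URLs, and the resource IDs are all hypothetical. `ec2_client` is assumed to be a boto3 EC2 client (`boto3.client("ec2")`), whose `replace_route` call accepts `RouteTableId`, `DestinationCidrBlock` and `NatGatewayId`.

```python
import urllib.request


def has_internet(check_urls, timeout_seconds=5, opener=urllib.request.urlopen):
    """Return True if any check URL answers successfully before the timeout."""
    for url in check_urls:
        try:
            with opener(url, timeout=timeout_seconds):
                return True
        except OSError:
            # URLError, HTTPError and socket timeouts are all OSError subclasses.
            # This sketch treats HTTP error responses as failures too.
            continue
    return False


def fail_over_to_nat_gateway(ec2_client, route_table_ids, nat_gateway_id):
    """Point each route table's default route at the standby NAT Gateway."""
    for route_table_id in route_table_ids:
        ec2_client.replace_route(
            RouteTableId=route_table_id,
            DestinationCidrBlock="0.0.0.0/0",
            NatGatewayId=nat_gateway_id,
        )


def check_and_fail_over(ec2_client, check_urls, route_table_ids, nat_gateway_id,
                        opener=urllib.request.urlopen):
    """Run one health check; return True if a failover was triggered."""
    if has_internet(check_urls, opener=opener):
        return False
    fail_over_to_nat_gateway(ec2_client, route_table_ids, nat_gateway_id)
    return True
```

In a Lambda you would call something like `check_and_fail_over(boto3.client("ec2"), ["https://www.example.com"], ["rtb-..."], "nat-...")` on a schedule. The `opener` parameter exists only so the check can be faked in tests without touching the network.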

I encourage folks to look at this repo as it is quite feature-rich. I’ve also attached their network diagram below. We did not end up using it since it was a bit overkill for the objective we had. Additionally, it relies on standby NAT Gateways, which I was trying to fully eliminate. That said, if I ever rolled this out to production, AlterNAT is the approach I would take.

Implementation

Since this was primarily an exercise in cost cutting, I decided to go with Fck-NAT. If this were a production environment, the fallback mechanism and robustness of AlterNAT would be much more appealing. But in this case I truly wanted to delete the NAT Gateway cost completely from our development environment.

I ended up going with the official Terraform module suggested by Fck-NAT. You can see an excerpt from our network module below.

    # Excerpt from our network module: one fck-nat instance per public subnet,
    # toggled by a feature flag so it can be switched off per environment.
    module "fck_nat" {
      source  = "RaJiska/fck-nat/aws"
      version = "1.3.0"
      count   = var.use_fck_nat ? 2 : 0

      name          = "${var.company_name}-fck-nat-${count.index + 1}"
      vpc_id        = aws_vpc.main.id
      subnet_id     = module.subnets.public_subnet_ids[count.index]
      instance_type = var.fck_nat_instance_type

      tags = {
        Name        = "${var.company_name}-fck-nat-${count.index + 1}"
        Environment = var.env
      }
    }

We used two t4g.nano instances. The cutover caused about 15-30 seconds of downtime in our development environment, so we did it in the middle of the night to avoid any angry devs.

Results

In our case, the results were quite dramatic. To start, we were able to cut NATGateway-Hours by 50%. We maintain a development and a production environment, and we fully killed the NAT Gateway in dev.

But the more surprising, and dramatic, cost savings were in NATGateway-Bytes. As mentioned, we had self-hosted GitHub runners and preview environments that pushed a lot of traffic when developers were active. During the week, we would routinely see upwards of $30-$40 of traffic per day. After rolling out this change, the highest we’ve seen is about $6.

In this case, I think a lot of this was driven by two main factors:

- Every PR would create a preview environment that would then run a whole suite of Playwright tests. This would run for every PR, for every commit. Though the compute overhead was minimal since the tests were not very demanding, I believe the amount of traffic they generated contributed to this.

- I believe the main cost from the self-hosted runners was actually streaming the logs back to GitHub. I spot checked a few of our tests (unit, integration, etc.), and almost every single log file I downloaded from GitHub was ~40-50MB in size. Doing some math, about 5-6 tests per commit means about 250MB per commit, and assuming the average PR has about 5 commits, that's about 1.25GB of data being streamed back to GitHub (and through our AWS NAT Gateway) per PR. That can easily start adding up, and I believe it also contributed to our high costs.
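The back-of-the-envelope math in the second point can be written out as a quick Python check. The inputs are just the rough ranges quoted above (log size per test, tests per commit, commits per PR); the function name is mine, and I'm using 1GB = 1000MB to keep the arithmetic simple.

```python
def log_volume_per_pr_gb(log_mb_per_test, tests_per_commit, commits_per_pr):
    """Estimate log data streamed back to GitHub for one PR, in GB (1 GB = 1000 MB)."""
    return log_mb_per_test * tests_per_commit * commits_per_pr / 1000


low = log_volume_per_pr_gb(log_mb_per_test=40, tests_per_commit=5, commits_per_pr=5)
high = log_volume_per_pr_gb(log_mb_per_test=50, tests_per_commit=6, commits_per_pr=5)

print(f"Estimated log traffic per PR: {low:.2f} GB to {high:.2f} GB")
```

That gives a range of 1.00GB to 1.50GB per PR, so the ~1.25GB figure sits right in the middle.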

Another interesting data point that might be relevant for anyone thinking about implementing this: in our implementation, as mentioned, we went with two t4g.nano instances. During the week, we would see peaks of 800GB-900GB of traffic daily. But these two instances have been able to easily handle this load, with no degradation that can be felt by developers.
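To put that traffic volume in dollar terms, here is a rough Python estimate of what it would cost in NAT Gateway data processing fees alone. The $0.045 per GB rate is an assumption on my part (the us-east-1 list price as I understand it), so check the current AWS pricing page for your region before relying on it.

```python
# Assumed NAT Gateway data processing price in USD per GB (us-east-1 list price).
NAT_GATEWAY_PRICE_PER_GB = 0.045


def nat_processing_cost_usd(gb_per_day, price_per_gb=NAT_GATEWAY_PRICE_PER_GB):
    """Daily NAT Gateway data processing cost for a given traffic volume."""
    return gb_per_day * price_per_gb


for gb in (800, 900):
    print(f"{gb} GB/day -> ${nat_processing_cost_usd(gb):.2f}/day")
# 800 GB/day -> $36.00/day
# 900 GB/day -> $40.50/day
```

Under that assumed rate, a peak day lands in the same ballpark as the $30-$40 per day we were seeing on the bill. Keep in mind that a self-hosted NAT only removes the per-GB processing fee; standard AWS data transfer charges still apply.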

In total, across these two major costs, we've seen roughly a 70% reduction in NAT Gateway spend, which has been quite impactful for this organization's total daily bill.

Conclusion

It may not be for all organizations, but if you find yourself bleeding money into NAT Gateways, and you happen to have environments where the stakes are low (e.g. a development or staging environment), self-hosting a NAT Gateway is a lot simpler than you'd expect. The open source community has made this really simple with out-of-the-box Terraform.

Sometimes, society can be wrong. Change requires risk takers - bold humans who choose not to listen to the status quo. You only live once - self host a NAT Gateway.