AI is transforming peer review — and many scientists are worried

Original link: https://www.nature.com/articles/d41586-025-04066-5

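A recent survey of 1,600 academics by Frontiers found that more than 50% have used AI tools during peer review, and nearly one-quarter have increased their use over the past year. Among those who use AI, the most common uses are helping to write reports (59%), summarizing manuscripts and identifying gaps (29%), and detecting potential misconduct (28%). Although some publishers, such as Frontiers, allow limited AI use that is disclosed, concerns remain about uploading unpublished manuscripts to external AI platforms because of the risks to confidentiality and intellectual property. The survey highlights a disconnect: researchers *are* using AI despite recommendations against it. It calls on publishers to adapt their policies to this ‘new reality’. Tests, including one of GPT-5, suggest that AI can mimic the structure of a peer-review report but often fails to provide constructive feedback and can make factual errors. Still, the trend is clear: AI is shaping peer review, which calls for responsible implementation with clear guidelines, human oversight and proper training.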

Survey results suggest that peer reviewers are increasingly turning to AI. Credit: Panther Media Global/Alamy

More than 50% of researchers have used artificial intelligence while peer reviewing manuscripts, according to a survey of some 1,600 academics across 111 countries by the publishing company Frontiers.

Nearly one-quarter of respondents said that they had increased their use of AI for peer review over the past year. The findings, posted on 11 December by the publisher, which is based in Lausanne, Switzerland, confirm what many researchers have long suspected, given the ubiquity of tools powered by large language models such as ChatGPT.

“It’s good to confront the reality that people are using AI in peer-review tasks,” says Elena Vicario, Frontiers’ director of research integrity. But the poll suggests that researchers are using AI in peer review “in contrast with a lot of external recommendations of not uploading manuscripts to third-party tools”, she adds.

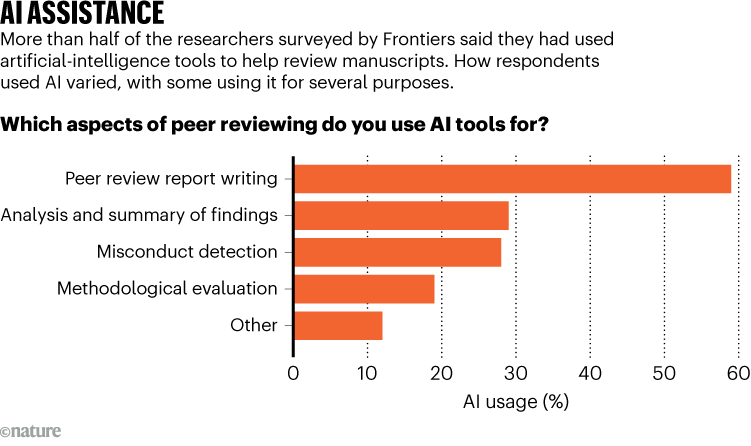

Source: Unlocking AI’s untapped potential, Frontiers

Some publishers, including Frontiers, allow limited use of AI in peer review, but require reviewers to disclose it. Like most other publishers, Frontiers forbids reviewers from uploading unpublished manuscripts to chatbot websites because of concerns about confidentiality, sensitive data and compromising authors’ intellectual property.

The survey report calls on publishers to respond to the growing use of AI across scientific publishing and implement policies that are better suited to the ‘new reality’. Frontiers itself has launched an in-house AI platform for peer reviewers across all of its journals. “AI should be used in peer review responsibly, with very clear guides, with human accountability and with the right training,” says Vicario.

“We agree that publishers can and should proactively and robustly communicate best practices, particularly disclosure requirements that reinforce transparency to support responsible AI use,” says a spokesperson for the publisher Wiley, which is based in Hoboken, New Jersey. In a similar survey published earlier this year, Wiley found that “researchers have relatively low interest and confidence in AI use cases for peer review,” they add. “We are not seeing anything in our portfolio that contradicts this.”

The Frontiers survey found that, among the respondents who use AI in peer review, 59% use it to help write their peer-review reports. Twenty-nine per cent said they use it to summarize the manuscript, identify gaps or check references. And 28% use AI to flag potential signs of misconduct, such as plagiarism and image duplication (see ‘AI assistance’).

Mohammad Hosseini, who studies research ethics and integrity at Northwestern University Feinberg School of Medicine in Chicago, Illinois, says the survey is “a good attempt to gauge the acceptability of the use of AI in peer review and the prevalence of its use in different contexts”.


Some researchers are running their own tests to determine how well AI models support peer review. Last month, engineering scientist Mim Rahimi at the University of Houston in Texas designed an experiment to test whether the large language model (LLM) GPT-5 could review a Nature Communications paper¹ he co-authored.

He used four different set-ups, from entering basic prompts asking the LLM to review the paper without additional context to providing it with research articles from the literature to help it to evaluate his paper’s novelty and rigour. Rahimi then compared the AI-generated output with the actual peer-review reports that he had received from the journal, and discussed his findings in a YouTube video.

His experiment showed that GPT-5 could mimic the structure of a peer-review report and use polished language, but that it failed to produce constructive feedback and made factual errors. Even advanced prompts did not improve the AI’s performance — in fact, the most complex set-up generated the weakest peer review. Another study found that AI-generated reviews of 20 manuscripts tended to match human ones but fell short on providing detailed critique.