
By Joe Brockmeier

December 15, 2025

Version 8.16.0 of the calibre ebook-management software, released on December 4, includes a "Discuss with AI" feature that can be used to query various AI/LLM services or local models about books, and ask for recommendations on what to read next. The feature has sparked discussion among human users of calibre as well, and more than a few are upset about the intrusion of AI into the software. After much pushback, it looks as though users will get the ability to hide the feature from calibre's user interface, but LLM-driven features are here to stay and more will likely be added over time.

Amir Tehrani proposed adding an LLM query feature directly to calibre in August 2025:

I have developed and tested a new feature that integrates Google's Gemini API (which can be abstracted to any compatible LLM) directly into the Calibre E-book Viewer. My aim is to empower users with in-context AI tools, removing the need to leave the reading environment. The results: capability of instant text summarization, clarification of complex topics, grammar correction, translation, and more, enhancing the reading and research experience.

Kovid Goyal, creator and maintainer of calibre, quickly voiced approval. He dismissed the idea that it might bother some calibre users and suggested that Tehrani submit a pull request for the feature. On August 10, Tehrani submitted the patches, and Goyal later merged them into mainline after refactoring the code. He provided a description of the additional LLM features he had in mind as well:

There are likely going to be new APIs added to all backends to support things like generating covers, finding what to read next, TTS [text-to-speech], grammar and style fixing in the editor and possibly metadata download.

Goyal did promise that calibre would "never ever use any third party service without explicit opt-in".

Discuss removing the feature

It did not take long after the Discuss feature was released for users to start asking for its removal. User "msr" on the Mobileread forum started a thread to ask if there was a way to block or hide all AI features:

I generally find the AI-push to be morally repugnant (among other things, I am an author whose work has been stolen for training) and I hate to see these features creep into software I use. I have zero interest in ever using so-called AI for anything.

Goyal replied that the features do nothing unless they are enabled. "The worst you get is a few menu entries. Simply ignore them."

Other users echoed the anti-AI sentiment. "Quoth" said they would not update calibre until the feature was scrapped. "It's a thin end of a wedge and encouraging people to use these over-hyped LLMs, even though off by default." Goyal replied that it is in calibre to stay:

It's not going to be scrapped, so good bye, I guess. You are more than welcome to not use AI if you don't want to. calibre very nicely makes that easy for you by having it off by default to the extent that the AI code is not even loaded unless you enable it. What you DO NOT get to do is try to make that choice for other people.

What's added so far

The feature is displayed in the calibre user interface by default; it shows up in the View menu as "Discuss selected books with AI". The naming is unfortunate on its own. Calling the process of sending queries to an LLM provider a discussion encourages people to anthropomorphize the tools and furthers the misconception that these tools "think" in the way that people do. Whatever value the responses may have, they do not reflect actual thought.

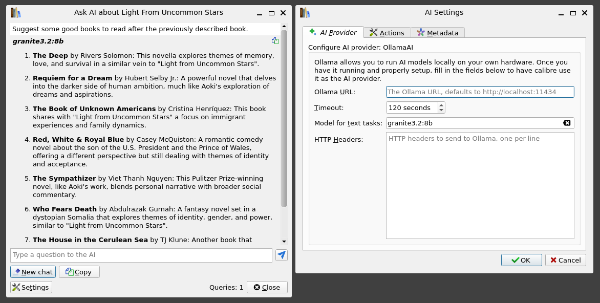

As Goyal pointed out, though, the Discuss feature does not work until an LLM provider is configured. If a user attempts to use it without doing so, calibre displays a dialog that directs the user to configure a provider first. Each provider is supplied as a separate plugin. Currently, calibre users have a choice of commercial providers, or running models locally using LM Studio or Ollama.

The Discuss feature shows up as a plugin as well. It is located in the calibre preferences in the "User interface action" category. However, it is a plugin that cannot be disabled or removed; nor can any of the other alleged plugins in that category. It seems fair to question whether something is actually a "plugin" if it cannot be unplugged. The separate provider plugins, in the "AI provider" category, can be disabled or removed, though. The provider plugins are enabled by default, but they do nothing until a user supplies credentials of some kind.

Users do not need to worry about accidentally enabling a feature that sends data off to a provider, because it is impossible to accidentally configure the plugins. For example, the GitHub AI provider requires an access token before it will work, and Google's AI provider needs an API key to function. Using a local provider requires the user to actually have LM Studio or Ollama set up, and then jump through a couple of hoops to enable them.

Even if a user wants to query an LLM about a book, they may encounter problems. I tried setting calibre up to use GitHub AI, but even after appearing to have successfully configured it as a provider with the token, I had no luck. I could send queries, but received no reply. I was able to get calibre working with Ollama, though the experience was not particularly compelling.

Responses from GitHub AI or Ollama about books are of little interest to me; a model may have ingested a million or more books as it was trained, but it hasn't read a single one, nor had any life experience that could spark an insight or reaction. Thoughtful discussions of books with well-read people with real perspectives, on the other hand, would be delightful—but beyond calibre's capabilities to provide.

Hide AI

Despite dismissing complaints about the addition of AI, Goyal has grudgingly accepted a pull request to hide AI features. He said that anyone offended by a few menu entries is not worth worrying about but, "I don't particularly mind having a tweak just to hide the menu entries, but that is all it should do". He added that someone would need to supply patches to hide additional AI functionality in the future. "That someone isn't going to be me as I don't have the patience to waste my time catering to insanity."

A "remove slop" pull request from "Ember-ruby" that would have stripped out AI features from calibre was rejected without comment. The forked calibre repository containing those patches may still be useful, however, to anyone considering a fork of their own.

At least two forks have been announced so far; one seems to have only gotten so far as the name, clbre "because the AI is stripped out". To date the only work that has shown up in that repository is to update the README. Xandra Granade announced rereading on December 9; that project is currently working on a fork called arcalibre, but its goals are limited to a snapshot of calibre "with all AI antifeatures removed" that can be used for future forks of calibre. No new features are planned for arcalibre.

The rereading draft charter suggests that the project will develop additional applications based on arcalibre. It is, of course, far too early to say whether the project will produce anything interesting in the long term. Any future forkers should note that the name "Excalibre" is right there for the taking.

Resistance seems futile

No doubt part of calibre's audience is pleased to see the feature, but it has proven to be an unwelcome addition for some of calibre's users. It is not surprising that those users have asked for it to be removed or changed in such a way that it can be hidden.

It has been a disappointing year overall for Linux and open-source enthusiasts who object to the seemingly relentless AI-ification of everything. It is fairly commonplace at this point to see companies shoving AI features into proprietary software whether the features actually make sense or not. However, an open-source project like calibre has no shareholders to please by ticking off the "AI inside" box, so few people would have had "adds AI" on their calibre bingo card for 2025.

An AI feature landing in calibre seems a fitting coda to the recurrent theme of AI and open source in 2025; whether users want to engage with AI or not, it is seemingly inescapable. One might wonder: if AI has come to calibre, a project with no commercial incentive to add it, is there no refuge to be had from it at all?

Bitwarden, which makes an open-source password manager and server, is now accepting AI-generated contributions, as is the KeePassXC password-manager project. Even projects like Fedora and the Linux kernel are accepting or leaning toward accepting LLM-assisted contributions; Mozilla is all-in on AI and pushing it into Firefox as well. This is not an exhaustive list of AI-friendly projects, of course; it would be exhausting to try to compile one at this point.

In most cases, though, users still have options without LLM features. When it comes to calibre, there is no alternative to turn to. Then again, there was no real alternative to calibre before it adopted "Discuss with AI", either. There are many open-source programs that handle reading ebooks; that is well-covered territory. Some, like Foliate, are arguably better than calibre at that task.

But there is no other ebook-management software (open source or otherwise) that has all of calibre's conversion features and support for exporting to such a wide variety of ebook readers. Evan Buss attempted a calibre alternative, called 22, in 2019. Buss threw in the towel after learning "ebook managers are much more difficult to get right than I had previously imagined", and maintaining compatibility with calibre "proved near impossible". Phil Denhoff started the Citadel project in late 2023. It looked like a promising calibre-compatible ebook-library manager, but its last release was in October 2024. Denhoff continues to make commits to the repository, though, so one might still hold out hope for the project.

While the lack of alternatives is frustrating for some, it is not Goyal's fault. The fact that the open-source community, to date, has not produced anything else that can fill in for calibre is not his problem. It is not his responsibility to take the program in any particular direction, nor is he obliged to entertain user complaints. Whether users love or loathe seeing calibre adding LLM features, it's up to its maintainer to decide what gets in and what doesn't.

For now, the AI-objectors on Linux have a few options. One is to live with lurking LLM features; another is to stick with calibre versions before 8.16.0. Goyal has made it easy to revert to an older version; the download.calibre.com site seems to have all prior releases of calibre going back to its pre-1.0 days. The Download for Linux page has instructions on reverting to previous versions, too. Those who get calibre from their Linux distribution may be LLM-free for some time without taking any action. Debian 13 ("trixie") users, for example, should be on the 8.5.0 branch for the remainder of the release's lifetime. Fedora 42 is still on the 8.0 branch, and Fedora 43 is on 8.14. Rawhide has 8.16.2, though, so users are likely to get the Discuss feature in Fedora 44.
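For readers who want to check a packaged version against the 8.16.0 cutoff, the comparison can be sketched in a few lines of Python. This is only an illustration: the function names are hypothetical, the version strings are the ones quoted above, and branch-only entries such as "8.0" are assumed to pad out to 8.0.0.

```python
# First calibre release that ships the Discuss feature, per the article.
FIRST_WITH_DISCUSS = (8, 16, 0)


def parse_version(text):
    """Turn '8.14' or '8.16.2' into a 3-tuple of ints, padding with zeros."""
    parts = [int(piece) for piece in text.split(".")]
    parts += [0] * (3 - len(parts))
    return tuple(parts[:3])


def has_discuss_feature(version_text):
    """Return True if this version is 8.16.0 or later."""
    return parse_version(version_text) >= FIRST_WITH_DISCUSS


# Versions as quoted in the article; branch-only entries are an approximation.
packaged = {
    "Debian 13 (trixie)": "8.5.0",
    "Fedora 42": "8.0",
    "Fedora 43": "8.14",
    "Fedora Rawhide": "8.16.2",
}

for distro, version in packaged.items():
    status = "includes" if has_discuss_feature(version) else "predates"
    print(f"{distro}: calibre {version} {status} the Discuss feature")
```

Comparing integer tuples rather than raw strings matters here, since a plain string comparison would sort "8.5.0" after "8.16.0".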

The strong reaction against calibre's Discuss feature may seem more emotional than logical. It is also understandable. Books are a human endeavor, even those that are in electronic format. AI models have often been trained by plundering a corpus of books, without respect for the author's wishes or copyright. Suggesting that the readers now turn to the technologies that seek to replace humans to supplement their reading experience is, for some at least, deeply offensive. It is a little puzzling that Goyal, who has catered to a large audience of book lovers for nearly 20 years, seems not to understand that.