“Move faster and break even more things.”

That twist on Silicon Valley’s old mantra echoes through recent engineering circles as “vibe coding” enters the chat. Yes, AI-assisted development is transforming how we build software, but it’s not a free pass to abandon rigor, review, or craftsmanship. "Vibe coding" is not an excuse for low-quality work.

Let’s acknowledge the good: AI-assisted coding can be a game-changer. It lowers barriers for new programmers and non-programmers, allowing them to produce working software by simply describing what they need. This unblocks creativity – more people can solve their own problems with custom software, part of a trend some call the unbundling of personal software (using small AI-built tools instead of one-size-fits-all apps). Even experienced engineers stand to benefit.

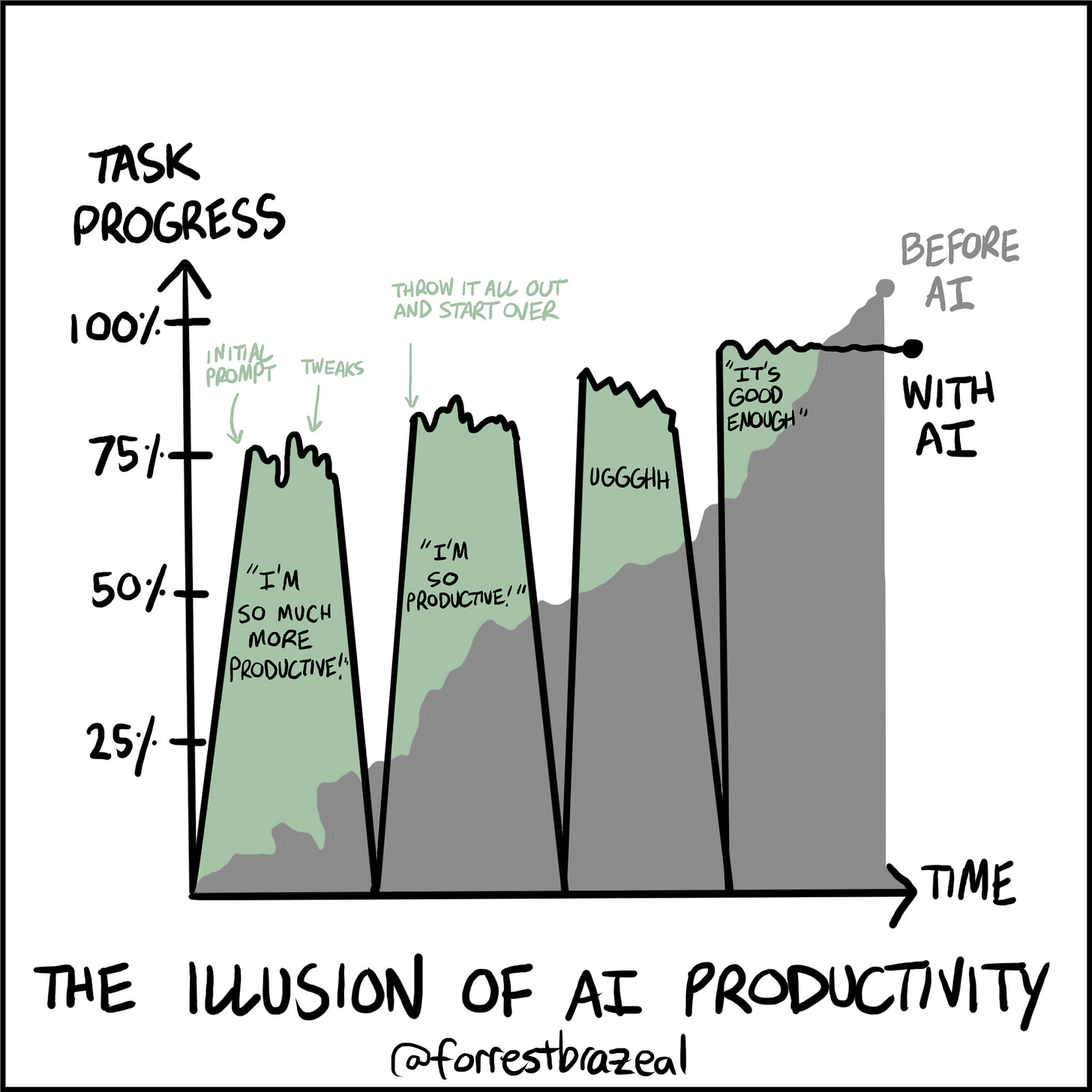

However, as any seasoned engineer will tell you, speed means nothing if the wheels fall off down the road. And this is where the cracks begin to show – in the gap between the vibe and the reality of building maintainable, robust software.

For all the hype, vibe coding has earned plenty of skepticism from veteran developers. The core critique: just because an AI can spit out code quickly doesn’t mean that code is any good. In fact, it can be downright dangerous to accept AI-generated output at face value. The joking complaint that “two engineers can now create the tech debt of fifty” contains a grain of truth. Unchecked AI-generated code can massively amplify technical debt, the hidden problems that make software brittle and costly to maintain.

Many early vibe-coded projects look good on the surface (“it works, ship it!”) but hide a minefield of issues: no error handling, poor performance, questionable security practices, and logically brittle code. One might say such projects are built on sand. I’ve used the term “house of cards code” – code that “looks complete but collapses under real-world pressure”. If you’ve ever seen a junior developer’s first big feature that almost works but crumbles with one unexpected input, you get the idea. AI can churn out a lot of code quickly, but volume ≠ quality.

"AI is like having a very eager junior developer on your team": an idea captured well in this illustration by .

The dangers aren’t purely hypothetical. Consider maintainability: who will maintain an AI-written module if it’s obscure or overly complex? If even the original developer doesn’t fully understand the AI’s solution, future modifications become nightmares. Security is another huge concern – AI might generate code that appears to work but has SQL injection flaws or unsafe error handling. Without rigorous review, these can slip into production. There’s also the risk of overfitting to the prompt: an AI will do exactly what you ask, which might not be what you truly need. Human coders often adjust a design as they implement, discovering misassumptions along the way. AI won’t catch those misassumptions unless the human in the loop notices and corrects them.
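To make the SQL injection risk concrete, here is a minimal Python sketch using the standard-library `sqlite3` module. The `users` table and both function names are hypothetical. The first function is the kind of string-built query that passes a quick demo; the second binds the input as a parameter, which is what a reviewer should insist on.

```python
import sqlite3


def find_user_unsafe(conn: sqlite3.Connection, name: str) -> list[tuple]:
    # Draft-style query: user input is pasted into the SQL text,
    # so a crafted `name` can change what the query means.
    query = f"SELECT id, name FROM users WHERE name = '{name}'"
    return conn.execute(query).fetchall()


def find_user_safe(conn: sqlite3.Connection, name: str) -> list[tuple]:
    # Reviewed version: the "?" placeholder makes sqlite3 bind `name`
    # as a value, so it can never be parsed as SQL.
    query = "SELECT id, name FROM users WHERE name = ?"
    return conn.execute(query, (name,)).fetchall()


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")  # throwaway in-memory database
    conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
    conn.executemany("INSERT INTO users (name) VALUES (?)", [("alice",), ("bob",)])

    payload = "nobody' OR '1'='1"
    print(find_user_unsafe(conn, payload))  # every row comes back
    print(find_user_safe(conn, payload))    # []
```

With that payload, the string-built query returns every row while the parameterized one returns nothing. Most database drivers support placeholders (the exact placeholder syntax varies by driver), so the same review comment applies well beyond SQLite.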

None of this is to say AI can’t write good code – it sometimes does – but rather that context, scrutiny, and expertise are required to discern good from bad. In 2025, we are essentially using a very eager but inexperienced assistant. You wouldn’t let a first-year junior dev architect your entire system unsupervised; similarly, you shouldn’t blindly trust an AI’s code without oversight. The hype of “AI magic” needs to meet the reality of software engineering principles.

So, how do we strike the balance? The key is not to throw vibe coding out entirely – it can be incredibly useful – but to integrate it in a disciplined way. Engineers must approach AI assistance as a tool with known limitations, not a mystical code genie. In practice, that means keeping the human in the loop and maintaining our standards of quality. Let’s explore what that looks like.

To use vibe coding effectively, change your mindset: treat the AI like a super-speedy but junior developer on your team. In other words, you – the senior engineer or team lead – are still the one responsible for the outcome. The AI might crank out the first draft of code, but you must review it with a critical eye, refine it, and verify it meets your quality bar.

Experienced developers who successfully incorporate AI follow this pattern intuitively. When an AI assistant suggests code, they don’t just hit “accept” and move on. Instead, they:

- Read and understand what the AI wrote, as if a junior dev on their team wrote it.

- Refactor the code into clean, modular parts if the AI’s output is monolithic or messy (which it often is). Senior engineers will break the AI’s blob into “smaller, focused modules” for clarity.

- Add missing edge-case handling. AI often misses corner cases or error conditions, so the human needs to insert those (null checks, input validation, etc.).

- Strengthen types and interfaces. If the AI used loose types or a leaky abstraction, a human can firm that up, turning implicit assumptions into explicit contracts.

- Question the architecture. Did the AI choose an inefficient approach? Maybe it brute-forced something that should use a more optimal algorithm, or perhaps it introduced global state where a pure function would suffice. A human should examine these decisions critically.

- Write tests (or, at the very least, manually test the code’s behavior). Treat AI code like any PR from a coworker: it doesn’t go in until it’s tested. If the AI wrote unit tests (some tools do), double-check those tests aren’t superficial.
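As a small illustration of that review pass, here is a hypothetical example: the `Order` type and `average_order_value` function are invented for this sketch, not taken from any real codebase. The AI's draft appears in the comment at the top; the reviewed version adds edge-case handling, replaces an untyped dict with an explicit dataclass contract, and comes with tests that exercise more than the happy path.

```python
from dataclasses import dataclass
from decimal import Decimal

# Hypothetical AI draft, shown for contrast (not executed):
#   def average_order_value(orders):
#       return sum(o["amount"] for o in orders) / len(orders)
# It raises ZeroDivisionError on an empty list, relies on an implied
# "amount" key, and silently accepts negative amounts.


@dataclass(frozen=True)
class Order:
    """Explicit contract instead of an untyped dict with an implied key."""
    order_id: str
    amount: Decimal

    def __post_init__(self) -> None:
        # Reject bad data at the boundary rather than deep inside the math.
        if self.amount < 0:
            raise ValueError(f"order {self.order_id}: amount must be non-negative")


def average_order_value(orders: list[Order]) -> Decimal:
    """Return the mean order amount, or Decimal("0") when there are no orders."""
    if not orders:
        # The edge case the draft missed.
        return Decimal("0")
    total = sum((o.amount for o in orders), Decimal("0"))
    return total / len(orders)


def test_average_of_two_orders() -> None:
    orders = [Order("a", Decimal("10.00")), Order("b", Decimal("20.00"))]
    assert average_order_value(orders) == Decimal("15")


def test_empty_input_returns_zero() -> None:
    assert average_order_value([]) == Decimal("0")


def test_negative_amount_is_rejected() -> None:
    try:
        Order("x", Decimal("-1"))
    except ValueError:
        return
    raise AssertionError("negative amount was accepted")
```

Returning zero for an empty list is itself a design decision; raising an error could be equally valid. The point is that a human decided it explicitly instead of inheriting whatever the draft happened to do.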

By doing this, you inject engineering wisdom into the AI-generated code. The combination can be powerful – the AI gets you a lot of code quickly, and your oversight ensures it’s solid. In fact, studies and anecdotes suggest senior devs get more value from AI coding tools than juniors. The reason is clear: seniors have the knowledge to steer the AI properly and fix its mistakes. Juniors may be tempted to treat the AI as an infallible authority, which it isn’t.

So, a critical rule emerges: Never accept AI-written code into your codebase unreviewed. Treat it like code from a new hire: inspect every line and make sure you understand it. If something doesn’t make sense to you, don’t assume the AI knows better – often it doesn’t. Either refine the prompt to have the AI clarify, or rewrite that part yourself. Consider AI output as a draft that must go through code review (even if that review is just you). On a team, this means if a developer used AI to generate a chunk of code, they should be prepared to explain and defend it in the code review with peers. “It works, trust me” won’t fly – the team needs confidence that the code is understandable and maintainable by humans.

Another best practice: keep humans in the driver’s seat of design. Use the AI to implement, not to decide fundamental architectures. For example, you might use vibe coding to quickly create a CRUD REST API based on an existing schema – that’s well-defined work. But you shouldn’t ask the AI to “design a scalable microservice architecture for our product” and then blindly follow it. High-level design and critical decisions should remain human-led, with AI as a helper for the tedious parts. In essence, let the AI handle the grunt work, not the brain work.

Communication and documentation also become crucial. If you prompt an AI to generate a non-trivial algorithm or use an unfamiliar library, take the time to document why that solution was chosen (just as you would if you wrote it yourself after research). Future maintainers – or your future self – shouldn’t be left guessing about the intent behind AI-crafted code. Some teams even log the prompts used to generate important code, effectively documenting the “conversation” that led to the code. This can help when debugging later: you can see the assumptions that were given to the AI.
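A prompt log does not need special tooling. The sketch below assumes a hypothetical team convention: one JSON Lines record per generation, stored alongside the code. The field names and the `log_prompt`/`prompts_for` helpers are illustrative, not a standard format.

```python
import json
from datetime import datetime, timezone
from pathlib import Path


def log_prompt(log_path: Path, target_file: str, prompt: str,
               model: str, notes: str = "") -> dict:
    """Append one provenance record per AI generation to a JSON Lines file."""
    record = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "target_file": target_file,
        "model": model,
        "prompt": prompt,
        "notes": notes,  # e.g. assumptions you had to correct afterwards
    }
    with log_path.open("a", encoding="utf-8") as fh:
        fh.write(json.dumps(record) + "\n")
    return record


def prompts_for(log_path: Path, target_file: str) -> list[dict]:
    """Return every logged prompt that touched a given file, oldest first."""
    if not log_path.exists():
        return []
    with log_path.open(encoding="utf-8") as fh:
        records = [json.loads(line) for line in fh if line.strip()]
    return [r for r in records if r["target_file"] == target_file]
```

Whether the log lives in the repository, in commit messages, or in PR descriptions is a team choice; what matters is that the assumptions handed to the AI stay searchable when someone is debugging the code later.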

In summary, human oversight isn’t a “nice-to-have” – it’s mandatory. The moment you remove the human from the loop, you’re just rolling dice on your software quality. Until AI can truly replace a senior engineer’s holistic understanding (we’re not there yet), vibe coding must be a partnership: AI accelerates, human validates.

Let’s crystallize the discussion into some actionable rules and best practices for teams adopting AI-assisted development. Think of these as the new “move fast, but don’t break everything” handbook – a set of guardrails to keep quality high when you’re vibing with the code.

Rule 1: Always Review AI-Generated Code – No exceptions. Every block of code that AI produces should be treated as if a junior engineer wrote it. Do a code review either individually or with a peer. This includes code from Copilot, ChatGPT, Cursor, or any AI agent. If you don’t have time to review it, you don’t have time to use it. Blindly merging AI code is asking for trouble.

Rule 2: Establish Coding Standards and Follow Them – AI tools will mimic whatever code they were trained on, which is a mixed bag. Define your team’s style guides, architecture patterns, and best practices, and ensure that any AI-generated code is refactored to comply. For instance, if your rule is “all functions need JSDoc comments and unit tests,” then AI output must get those comments and tests before it’s done. If your project uses a specific architecture (say, layered architecture with service/repository classes), don’t let the AI shove some ad-hoc database calls in UI code – fix it to fit your layers. Consider creating linting or static analysis checks specifically for common AI mistakes (e.g. flagging use of deprecated APIs or overly complex functions). This automates quality control on AI contributions.
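Here is a minimal sketch of such a check, written against Python's standard `ast` module. It flags two things: functions longer than a line budget (a crude proxy for complexity) and calls to APIs your team has marked as deprecated. The threshold and the deprecated list are hypothetical team policy; `datetime.utcnow` appears only because it is deprecated as of Python 3.12. Off-the-shelf linters already provide richer checks (for example, the McCabe complexity check available through flake8), so a custom script like this is best kept for project-specific rules.

```python
import ast

# Hypothetical team policy: tune both to your own codebase.
MAX_FUNCTION_LINES = 40
DEPRECATED_CALLS = {"datetime.utcnow", "datetime.datetime.utcnow"}


def check_source(source: str, filename: str = "<string>") -> list[str]:
    """Return human-readable warnings for one Python source file."""
    warnings: list[str] = []
    tree = ast.parse(source, filename=filename)
    for node in ast.walk(tree):
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef)):
            length = node.end_lineno - node.lineno + 1
            if length > MAX_FUNCTION_LINES:
                warnings.append(
                    f"{filename}:{node.lineno}: function '{node.name}' is "
                    f"{length} lines (limit {MAX_FUNCTION_LINES}); consider splitting it"
                )
        elif isinstance(node, ast.Call):
            # Render the called expression back to text, e.g. "datetime.datetime.utcnow".
            name = ast.unparse(node.func)
            if name in DEPRECATED_CALLS:
                warnings.append(f"{filename}:{node.lineno}: call to deprecated '{name}'")
    return warnings


def main(paths: list[str]) -> int:
    """Lint each file; return a non-zero exit code if anything was flagged."""
    problems: list[str] = []
    for path in paths:
        with open(path, encoding="utf-8") as fh:
            problems.extend(check_source(fh.read(), filename=path))
    for problem in problems:
        print(problem)
    return 1 if problems else 0
```

In CI you might call `sys.exit(main(sys.argv[1:]))` on the changed files so the build fails whenever a warning is reported. Because the check matches call text, it only catches the spellings listed in `DEPRECATED_CALLS`.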

Rule 3: Use AI for Acceleration, Not Autopilot – In practice, this means use vibe coding to speed up well-understood tasks, not to do thinking for you. Great uses: generate boilerplate, scaffold a component, translate code from one language to another, draft a simple algorithm from pseudocode. Risky uses: have the AI design your module from scratch with minimal guidance, or generate code in a domain you don’t understand at all (you won’t know if it’s wrong). If you intend to keep the code, don’t stay in vibe mode – switch into engineering mode and tighten it up.

Rule 4: Test, Test, Test – AI doesn’t magically guarantee correctness. Write tests for all critical paths of AI-written code. If the AI wrote the code, it may even help you write some tests – but don’t rely solely on AI-generated tests, as they might miss edge cases (or could be falsely passing due to the same flawed logic). Do manual testing too, especially for user-facing features: click through the UI, try odd inputs, see how it behaves. Many vibe-coded applications work fine for the “happy path” but fall apart with unexpected input – you want to catch that before your users do.
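One way to guard against tests that share the implementation's blind spots is to check the code against an independent, deliberately simple reference on many inputs, odd ones included. In this sketch, Kadane's maximum-subarray algorithm merely stands in for an AI-written function; the brute-force reference is slow but easy to verify by reading it. The function names, input ranges, and trial count are arbitrary choices for illustration.

```python
import random


def max_subarray_sum(xs: list[int]) -> int:
    """Kadane's algorithm, standing in for the AI-written function under test."""
    if not xs:
        raise ValueError("xs must be non-empty")
    best = current = xs[0]
    for x in xs[1:]:
        current = max(x, current + x)  # extend the running subarray or restart at x
        best = max(best, current)
    return best


def max_subarray_sum_reference(xs: list[int]) -> int:
    """Deliberately naive oracle: sums every contiguous slice (roughly O(n^3))."""
    return max(sum(xs[i:j]) for i in range(len(xs)) for j in range(i + 1, len(xs) + 1))


def test_against_reference(trials: int = 500, seed: int = 0) -> None:
    rng = random.Random(seed)
    # Hand-picked odd inputs first: single element, all negative, zeros.
    cases = [[5], [-3], [-2, -1, -7], [0, 0, 0], [3, -10, 4]]
    for _ in range(trials):
        n = rng.randint(1, 12)
        cases.append([rng.randint(-20, 20) for _ in range(n)])
    for xs in cases:
        assert max_subarray_sum(xs) == max_subarray_sum_reference(xs), xs


def test_empty_input_is_rejected() -> None:
    try:
        max_subarray_sum([])
    except ValueError:
        return
    raise AssertionError("empty input was accepted")
```

Because the reference shares no logic with the implementation, a bug in one is unlikely to be mirrored in the other; a fixed seed keeps any failure reproducible.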

Rule 5: Iterate and Refine – Don’t accept the first thing the AI gives you if it’s not satisfactory. Vibe coding is an iterative dialogue. If the initial output is clunky or confusing, you can prompt the AI to improve it (“simplify this code,” “split this into smaller functions,” etc.). Or you can take the draft and refactor it yourself. Often, a good approach is using the AI in cycles: prompt for an implementation, identify weaknesses, then either prompt fixes or manually adjust, and repeat.

Rule 6: Know When to Say No – Sometimes, vibe coding just isn’t the right tool. Part of using it responsibly is recognizing scenarios where manual coding or deeper design work is needed. For example, if you’re dealing with a critical security module, you probably want to design it carefully and maybe only use AI to assist with small pieces (if at all). Or if the AI keeps producing a convoluted solution to a simple problem, stop and write it yourself – you might save time in the end. It’s important not to become overly reliant on the AI to solve every problem. Don’t let “AI did it” become an excuse for not understanding your own code. If after a few attempts the AI isn’t producing what you need, take back control and code it the old-fashioned way; you’ll at least have full understanding then.

Rule 7: Document and Share Knowledge – Ensure that any code coming from AI is documented just as thoroughly as hand-written code (if not more). If there were non-obvious decisions or if you suspect others might be confused by what the AI produced, add comments. In team discussions, be open about what was AI-generated and what wasn’t. This helps reviewers pay extra attention to those sections.

Following these rules, teams can enjoy the productivity perks of vibe coding while mitigating the worst risks. Think of it as augmenting human developers, not replacing them. The goal is to co-create with AI: let it handle the repetitive drudge work at high speed, while humans handle the creative and critical thinking parts.

It’s also important to recognize where vibe coding shines and where it doesn’t. Not every project or task is equally suited to an AI-driven workflow. Here’s a breakdown drawn from industry discussions and early experiences:

👍 Great Use Cases:

Rapid prototyping is perhaps the sweet spot of vibe coding. If you have an idea for a small app or feature, using an AI assistant to throw together a quick prototype or proof-of-concept can be incredibly effective. In such cases, you don’t mind if the code is a bit hacky; you just want to validate the idea. Many engineers have found success in weekend projects using only AI to code – a fun way to test a concept fast. Another good use case is one-off scripts or internal tools: e.g., a script to parse a log file, a small tool to automate a personal task, or an internal dashboard for your team. These are typically low-stakes; if the script breaks, it’s not the end of the world. Here, vibe coding can save time because you don’t need production-grade perfection, just something that works for now.

Vibe coding also works well for learning and exploration. If you’re working in a new language or API, asking an AI to generate examples can accelerate your learning. (Of course, double-check the AI’s output against official docs!) In exploratory mode, even if the AI’s code isn’t perfect, it gives you material to tinker with and learn from. It’s like having a teaching assistant who can show you attempts, which you then refine.

Additionally, AI code generation can excel at structured, boilerplate-heavy tasks. Need to create 10 similar data classes? Or implement a rote CRUD layer? AI is great at that kind of mechanical repetition, freeing you from the tedium. As long as the pattern is clear, the AI will follow it and save you keystrokes (just verify it followed the pattern correctly).

👎 Not-So-Great Use Cases:

On the flip side, enterprise-grade software and complex systems are where vibe coding often falls flat. Anything that requires a deep understanding of business logic, heavy concurrency, rigorous security, or compliance is not something to fully trust to AI generation. The AI doesn’t know your business constraints or performance requirements unless you explicitly spell them out (and even then, it may not get it right). For example, a fintech application handling payments or an aerospace control system must meet standards that current AI simply isn’t equipped to guarantee. In these domains, AI can assist in parts, but human expertise and careful QA are paramount for the final product.

Vibe coding also struggles with long-term maintainability. If you’re building a codebase that will live for years and be worked on by many developers, starting it off with a hodge-podge of AI-generated code can be a poor foundation. Without strong guidance, the architecture might be inconsistent. It’s often better to spend extra time up front building a clean framework (with or without AI help) than to patchwork a whole product via successive AI prompts. Many early adopters have observed that the initial time saved by vibe coding can be lost later during code cleanup and refactoring when the project needs to scale or adapt.

Another scenario to be wary of is critical algorithms or optimizations. AI can produce a sorting algorithm or a database query, sure – but if you need it to be highly optimized (say, a custom memory management routine, or an algorithm that must run in sub-linear time), you’re in territory where human ingenuity and deep understanding are still superior. AI might give you something that works on small data, but falls over at scale, and it won’t necessarily warn you about that. A human performance engineer would design and test with those considerations in mind from the start.
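A common small-scale version of this trap is removing duplicates while preserving order. Both functions below (names hypothetical) return identical results, and on a few hundred items you would never notice a difference. The first checks membership in a list, so its running time grows quadratically; the second uses a set and stays roughly linear. Timing them at doubling input sizes makes the gap visible: the quadratic version should take roughly four times as long each time n doubles.

```python
import time


def dedupe_quadratic(items: list[str]) -> list[str]:
    # Plausible first draft: `x not in seen` scans a list, so the loop is O(n^2).
    seen: list[str] = []
    for x in items:
        if x not in seen:
            seen.append(x)
    return seen


def dedupe_linear(items: list[str]) -> list[str]:
    # Same output, but set membership is O(1) on average, so the loop is O(n).
    seen: set[str] = set()
    out: list[str] = []
    for x in items:
        if x not in seen:
            seen.add(x)
            out.append(x)
    return out


if __name__ == "__main__":
    for n in (1_000, 2_000, 4_000, 8_000):
        data = [f"user-{i}" for i in range(n)]  # all unique: worst case for the list scan
        for fn in (dedupe_quadratic, dedupe_linear):
            start = time.perf_counter()
            fn(data)
            print(f"{fn.__name__:>17} n={n:>5}: {time.perf_counter() - start:.4f}s")
```

The set-based version requires hashable items; for those, `list(dict.fromkeys(items))` is a compact equivalent that also preserves insertion order (Python 3.7+).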

Finally, any situation where explainability and clarity are top priorities might not be ideal for vibe coding. Sometimes you need code that other people (or auditors) can easily read and reason about. If the AI comes up with a convoluted approach, it could hinder clarity. Until AI can reliably produce simple and clearly structured code (which it’s not always incentivized to do – sometimes it’s overly verbose or oddly abstract), a human touch is needed to keep things straightforward.

In summary, vibe coding is a powerful accelerator, but not a silver bullet.

Use it where speed matters more than polish, and where you have the leeway to iterate and fix things. Avoid using it as a one-shot solution for mission-critical software – that’s like hiring a race car driver to drive a school bus; wrong tool for the job. Maybe one day AI will be so advanced that vibe coding truly can be the default for all development – but today is not that day. Today, it works best as a helper for the right problems and with the right oversight.

Vibe coding, and AI-assisted software development in general, represents a thrilling leap forward in our tools. It’s here to stay, and it will only get more sophisticated from here. Forward-looking engineering teams shouldn’t ignore it – those who harness AI effectively will likely outpace those who don’t, just as teams that embraced earlier waves of automation and better frameworks outpaced those writing everything from scratch. The message of this article is not to reject vibe coding, but to approach it with eyes open and with engineering discipline intact.

The big takeaway is that speed means nothing without quality. Shipping buggy, unmaintainable code faster is a false victory – you’re just speeding towards a cliff. The best engineers will balance the two: using AI to move faster without breaking things (at least not breaking things any more than we already do!). It’s about finding that sweet spot where AI does the heavy lifting and humans ensure everything stands up properly.

For tech leads and engineering managers, the call to action is clear: set the tone that AI is a tool to be used responsibly. Encourage experimentation with vibe coding, but also establish the expectations (perhaps via some of the rules we outlined) that safeguard your codebase. Make code reviews mandatory for AI-generated contributions, create an environment where asking “hey, does this make sense?” is welcome, and invest in upskilling your team to work with AI effectively. This might even mean training developers on how to write good prompts or how to evaluate AI suggestions critically. It’s a new skill set, akin to the shift to high-level languages or to distributed version control in the past – those who adapt sooner will reap benefits.

We should also keep in perspective what truly matters in software engineering: solving user problems, creating reliable systems, and continuously learning. Vibe coding is a means to an end, not an end in itself. If it helps us serve users better and faster, fantastic. But if it tempts us to skip the due diligence that users ultimately rely on (like quality and security), then we must rein it in. The fundamentals – clear thinking, understanding requirements, designing for change, testing thoroughly – remain as important as ever, if not more so.

In the end, perhaps the ethos should be: “Move fast, but don’t break things – or if you do, make sure you know how to fix them.” Leverage the vibes to code at light speed, but back it up with the solid bedrock of engineering excellence. AI can coexist with craftsmanship; in fact, in the hands of a craftsman, it can be a powerful chisel. But the craftsman’s hand is still needed to guide that chisel to create something truly enduring and well-made.

So, vibe on, developers – just do it with care. Embrace the future, but don’t abandon the principles that got us here. Vibe coding is not an excuse for low-quality work; rather, it’s an opportunity to elevate what we can achieve when we pair human judgment with machine generative power. The teams that internalize this will not only move fast – they’ll build things worth keeping.

Happy coding, and keep the vibes high and the quality higher.